Here are some ways to help improve the call quality monitoring process, suggested by both Carolyn Blunt and our readers.

1. Make Mistakes Seem Easy to Fix

Giving feedback is a vital part of the quality monitoring process, but there is always a risk of upsetting advisors.

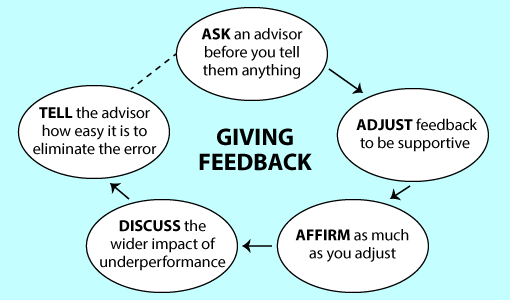

So, Carolyn Blunt from Ember Real Results offers the following pointers for dealing with this tricky situation:

- Ask their opinion before you TELL them anything

- Use their opinion to shape your feedback so that it is supportive, and keep it discreet

- Affirm as much as you adjust; don’t feel pressured to stick to the “praise sandwich”

- Discuss the wider impact of underperformance in quantifiable terms, e.g. this mistake costs the company £x each month

- Make the fault seem easy to correct; tell the advisor that it will be easy to eliminate this error if they just…

For more on this topic, read our article: How to Give Feedback to an Employee… Without Upsetting Them

2. Focus Team Leaders on Coaching, Not Admin

A common issue in many contact centres is that team leaders simply do not have the time to monitor multiple contacts for each advisor on their team every week or month, and to give feedback on them.

So Carolyn advises removing administrative tasks from team leaders and scheduling 80% of their time for managing their team. This includes more time for quality monitoring and for developing soft skills, among other things.


However, it is also important to think about how the time away from the contact centre floor is managed.

Carolyn worked with a client whose contact centre’s “two busiest days were Mondays and Tuesdays, and those were the days that their team leaders were doing a lot of reporting, meetings etc.

“So, the first thing we had to think about was how can we restructure this workload so that team leaders can be really visible at the most important of times.”

Organising meetings during peak periods is just one scheduling mistake. For more, read our article: 15 Scheduling Mistakes You Need to Avoid at All Cost

3. Avoid Tick-Box Scoring on Calls

A common bugbear for customers is that advisors sound robotic on the phone, yet call monitoring scorecards often encourage this.

As Carolyn says, “It disappoints me that certain things have historically emerged as best practice in the contact centre industry, such as tick-box approaches to quality with statements in them like: ‘Did the advisor say “Is there anything else I can help you with?” at the end of the call?’

“The problem with that is that if the advisor doesn’t say it, they lose ten points on a spreadsheet, when in a lot of cases it’s not necessary. Or worse, a customer could say, ‘Well, you didn’t even help me with what I originally phoned up for.’

“So think about giving advisors the freedom to decide the correct way to close the call, and creating a holistic quality framework based on customer satisfaction rather than being picky about certain parts of the advisor’s performance.”

For advice on how to create a scorecard that follows this logic, visit our page: A Beginner’s Guide to Balanced Scorecards

4. Introduce Self- and Peer-to-Peer Assessments

While it’s valuable for team leaders and call analysts to monitor calls, especially for calibration purposes, the contact centre can also benefit greatly from an environment of self-learning and peer-to-peer discussion.

Carolyn suggests “giving advisors the space to say, ‘I want to go on that training course’ or ‘maybe I do need some help with this’, rather than waiting for it to be handed to them on a plate.

“Also, peer-to-peer assessments are a really great way of getting advisors to learn from each other, and a major cultural consideration to think about.”

In addition, for one-to-one monitoring sessions, Nick, one of our readers, suggests “allowing advisors to do call monitoring on their own calls, then you can chat about their results versus your results.”

Follow the link to: How to Calibrate Quality Scores

5. Show Advisors What “Good” Looks Like

While we encourage the use of self-assessment, we are often asked: “Is it possible to achieve self-improvement through advisors monitoring their own calls?”

The answer, according to Carolyn, is yes, but only “as long as advisors know what ‘good’ looks like and there is no significant pay/reward at stake.

“Otherwise an advisor may think that their call was brilliant and actually it wasn’t, so it is important to understand what the gaps might be. Most people will, if they are encouraged and shown how to, be able to critique their own calls.

“Also, no bonuses, in terms of pay or otherwise, should be linked to self-assessment, because it is more than likely that advisors will not be honest with their scoring.”

6. Monitor the Call Directly After an Angry Customer Interaction

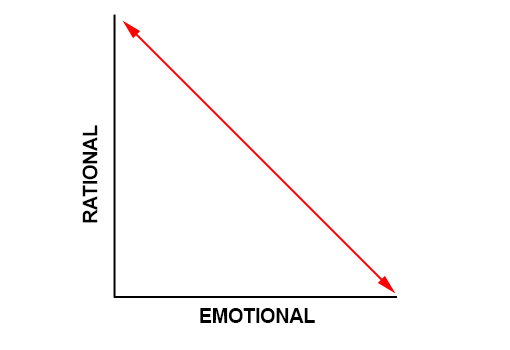

When monitoring an advisor who is handling a difficult call, it is good practice to also monitor and analyse the call that follows. This is because, as Carolyn says, “difficult calls can hook emotions.

“This is why advisors may need additional time to step back, to allow the rational brain to engage, as the more emotionally involved we get in a conversation, the more our rationality lowers,” as seen below.

So if advisors have not fully “unhooked” from the previous call, it could affect their performance in the next customer interaction.

If this is noticed in quality monitoring, it may be worth allowing advisors extra time for their “rational brain” to recover after dealing with an angry customer. This time should then be added to any shrinkage calculations that the contact centre makes.

Follow the link to find out: How to Calculate Contact Centre Shrinkage
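To see how recovery time after angry calls feeds into shrinkage, here is a minimal Python sketch, assuming the common definition of shrinkage as the share of paid hours in which advisors are unavailable to handle contacts. The function names, the 37.5-hour week, the six hours of existing shrinkage and the ten-minute recovery allowance are hypothetical figures chosen for illustration.

```python
def shrinkage(paid_hours: float, unavailable_hours: float) -> float:
    """Fraction of paid time in which an advisor cannot handle contacts."""
    if paid_hours <= 0:
        raise ValueError("paid_hours must be positive")
    return unavailable_hours / paid_hours


def recovery_hours(difficult_calls: int, minutes_per_recovery: float) -> float:
    """Extra off-phone time granted after difficult calls, converted to hours."""
    return difficult_calls * minutes_per_recovery / 60


# Hypothetical week: 37.5 paid hours, 6 hours already lost to breaks,
# training and meetings, plus 12 difficult calls with 10 minutes' recovery each.
paid = 37.5
existing = 6.0
extra = recovery_hours(difficult_calls=12, minutes_per_recovery=10)

before = shrinkage(paid, existing)
after = shrinkage(paid, existing + extra)
print(f"Shrinkage before: {before:.1%}, after: {after:.1%}")
```

With these figures the script prints `Shrinkage before: 16.0%, after: 21.3%`, a reminder that even short recovery breaks add up and should be planned for rather than absorbed silently.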

Tips from our Readers

7. Don’t Cut Corners

- Above all, quality assessment, coaching and feedback must be balanced. Mention the improvements needed but, just as importantly, what the advisor did well.

- Integrity is vital, both personally and professionally. Stay neutral, whoever the advisor may be.

- Do not cut corners. If there are any hitches along the way, note them in the QA feedback.

- Make it clear to advisor colleagues that you do not know everything. You are there to learn, just as they are.

Thanks to Owen Simmons

8. Track the Feedback That You Give

I believe in giving feedback on a daily basis; otherwise call monitoring loses its importance and advisors struggle to maintain motivation.

In fact, it can be useful to maintain a feedback tracker to monitor whether advisors act on the feedback they are given.

This is helpful, as analysts can recognise the advisors who follow the feedback. Analysts can also focus further effort on helping advisors who do not show improvement after feedback.

Thanks to Indra Edamadaka
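A feedback tracker can be as simple as a spreadsheet. As a minimal sketch, assuming each entry records the quality score on the call that prompted the feedback and the score on the next monitored call, the Python below groups advisors into those who improved, those who may need more support, and those not yet re-monitored. The `FeedbackEntry` and `review` names, the fields and the sample data are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional, Tuple


@dataclass
class FeedbackEntry:
    """One piece of feedback logged in a hypothetical feedback tracker."""
    advisor: str
    given_on: date
    focus_area: str                      # what the feedback asked the advisor to change
    score_before: float                  # quality score on the call that prompted the feedback
    score_after: Optional[float] = None  # score on the next monitored call, once available


def review(entries: List[FeedbackEntry]) -> Tuple[List[str], List[str], List[str]]:
    """Group advisors by whether their next monitored call scored higher."""
    improved, needs_support, pending = [], [], []
    for entry in entries:
        if entry.score_after is None:
            pending.append(entry.advisor)
        elif entry.score_after > entry.score_before:
            improved.append(entry.advisor)
        else:
            needs_support.append(entry.advisor)
    return improved, needs_support, pending


# Hypothetical tracker contents.
tracker = [
    FeedbackEntry("Advisor A", date(2024, 3, 4), "closing the call", 72, 85),
    FeedbackEntry("Advisor B", date(2024, 3, 4), "showing empathy", 80, 78),
    FeedbackEntry("Advisor C", date(2024, 3, 5), "using hold correctly", 65),
]

improved, needs_support, pending = review(tracker)
print("Recognise:", improved)
print("Offer more support:", needs_support)
print("Awaiting next monitored call:", pending)
```

Comparing a single call before and after feedback is a crude measure; in practice you might compare averages over several calls, or only the criterion the feedback targeted.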

9. Don’t Have Evaluation Criteria Based on Scales

Scoring on one-to-five scales, or on ratings such as “good” and “outstanding”, might create the perception that a four is just enough to “pass” the monitoring, so the advisor will think: “Well, I don’t need to make any additional effort to get a five, because a four is good enough!”

Scales also make reporting harder, because it is more difficult for a quality analyst to measure the results and determine root causes.

So, yes or no scoring is the better option, in my opinion, as long as the guidelines are clear!

Some may say that this will demotivate advisors, but the whole purpose of monitoring is to identify opportunities for improvement and to help develop agents, not to penalise.

Thanks to Diogo F.
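To illustrate the reporting point in the tip above, here is a minimal Python sketch, assuming every monitored call is scored against the same set of yes/no criteria. It calculates the pass rate for each criterion and sorts them lowest first, so the weakest area stands out. The criteria names and results are hypothetical.

```python
from collections import defaultdict

# Each monitored call is scored against the same yes/no criteria.
# Criteria names and results are hypothetical.
evaluations = [
    {"verified identity": True, "resolved query": True, "explained next steps": False},
    {"verified identity": True, "resolved query": False, "explained next steps": False},
    {"verified identity": True, "resolved query": True, "explained next steps": True},
    {"verified identity": False, "resolved query": False, "explained next steps": False},
]


def pass_rates(evals):
    """Return (criterion, share of calls that met it) pairs, lowest rate first."""
    met = defaultdict(int)
    for ev in evals:
        for criterion, passed in ev.items():
            met[criterion] += int(passed)
    rates = {criterion: count / len(evals) for criterion, count in met.items()}
    return sorted(rates.items(), key=lambda item: item[1])


for criterion, rate in pass_rates(evaluations):
    print(f"{criterion}: {rate:.0%}")
```

With this sample data, “explained next steps” comes out lowest at 25%, which points to where coaching could focus first; an average of one-to-five ratings across the same calls would blur that signal.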

What advice would you give to improve the quality monitoring process?

Please email your thoughts to Call Centre Helper.

For more suggestions on improving quality monitoring, read the following articles:

- 30 Tips to Improve Your Call Quality Monitoring

- Seven Deadly Sins of Call Quality Monitoring

- 36 Ways to Improve Call Quality Monitoring

- 15 Tips to Improve Quality Monitoring

- 10 Top Tips to Improve Your Quality Scores

Author: Robyn Coppell

Published On: 11th Sep 2017 - Last modified: 13th Jan 2025
