There is a host of metrics for a contact centre to choose from. But what needs to be measured to ensure high performance?

Here are some key points that are important to remember when making this decision.

Metrics Should Cover These Three Fundamental Areas

The famous management consultant Peter Drucker once stated: “You can’t manage what you don’t measure,” a phrase that has been adopted by many in the contact centre industry.

However, there is so much that can be measured in the contact centre in terms of demand across channels, resource management and utilisation.

As Paul Weald, the Director at mcx, says: “Fifteen years ago, we would have been talking about efficiency-based activities, e.g. measuring call durations, aligned to an effectiveness measure, such as First Call Resolution (FCR) or conversion rates.

“Yet, over the past ten years, contact centres have started to take the customer experience much more seriously and therefore we have a much broader perspective of what’s going on in our contact centre.

“It’s now about three things: measuring what our customers want from us, measuring what our staff want from us and the wider organisational objectives, including finance and so forth.”

So, when making a decision over which metrics should be employed in the contact centre, it is important to ensure that each of the bases is covered.

Metrics Should Measure Customer Experience Across ALL Channels

To understand customer expectations and to measure the customer experience, it is advisable for an organisation to look at its own performance through the lens of its customers.

This includes performance across each of the contact centre’s channels, not just over the phone. Paul Weald uses social media as an example of why this matters, noting that it is a way “of getting to understand the sentiment that people have towards a brand, and also it is a complaint outlet.”

Also, Paul says that the contact centre “shouldn’t forget written communication, including white mail, as there is still a need to send information in the written form, particularly for any type of legal transaction, as well as email and webchat.”

But how do you measure the customer experience over all of these channels?

According to Paul, “it’s going to have to be some combination of internal quality measures, i.e. advisor quality assessments, mystery shopping, or it could be part of a Voice of the Customer (VoC) programme.”

The VoC programme is likely to include customer survey-based metrics, such as Customer Effort, Net Promoter Score or Net Emotional Value.

The Advisor Experience Must Not Be Forgotten

There have been numerous reports in customer service environments which suggest that achieving a high level of customer satisfaction relies on employee engagement, motivation and morale.


Paul Weald says that, “if you have that type of workforce in your contact centre, then you have a great ‘DNA’ to boost metric scores across the board.

“So, you need to measure the organisational culture, which is more than just an annualised employee survey.”

To do this, the contact centre should consider using an Employee Net Promoter Score (ENPS), which is generally calculated through a simple advisor survey on a quarterly basis.

There have been many success stories of organisations using this metric, such as PhotoBox’s contact centre in London. However, Paul stresses that the metric should be used:

- In an environment where information is shared and the results are acted upon

- To change the mindset of staff to understand “what good looks like” from a customer perspective

- In tandem with operational discipline – e.g. attendance, behaviour, etc.
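As a rough illustration of the arithmetic behind an Employee NPS, the sketch below assumes the conventional NPS scoring: advisors answer a 0 to 10 "how likely are you to recommend working here?" question, scores of 9 or 10 count as promoters and scores of 0 to 6 count as detractors. The function name and the sample scores are hypothetical and not taken from any survey tool.

```python
def employee_nps(scores):
    """Return eNPS: percentage of promoters minus percentage of detractors.

    Assumes the conventional 0-10 scale (9-10 promoter, 0-6 detractor).
    The result ranges from -100 to +100.
    """
    if not scores:
        raise ValueError("at least one survey response is required")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100.0 * (promoters - detractors) / len(scores)


# Hypothetical responses from one quarterly advisor survey
quarterly_scores = [10, 9, 9, 8, 7, 7, 6, 5, 10, 3]
print(employee_nps(quarterly_scores))  # 4 promoters, 3 detractors -> 10.0
```

The score on its own says little; comparing it quarter on quarter, and sharing and acting on the results as Paul suggests, is where the value lies.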

For more on this topic, read our article: How to Calculate an Employee NPS

Measuring the Stakeholder Experience Is Also Important

While it may sometimes feel this way, the contact centre doesn’t exist in a vacuum, and it’s important that the costs and values are reported into the wider organisation.

So, while it is important to report the cost-per-contact, the call centre also needs to make sure that resources are being used appropriately.

Paul Weald says that on a human level, this can be tracked through measuring “advisor utilisation and occupancy, but also by making sure that advisors are focused on the right things and encouraging advisors to promote digital channels to certain customers.”

Paul also recommends tracking the reasons for repeat contacts that stem from failure demand, both to benefit the wider organisation and to reduce future contact volumes.

He says that contact centres “need to recognise that the organisation is not perfect – it can always improve. And, I think you know that will manifest itself in terms of failure demand coming into your operation.


“So, that’s where customers ring up or get in contact because something hasn’t happened. For example, if a delivery hasn’t happened, what you need to do, from a customer service perspective, is to resolve that issue there and then.

“But take a step back, and say ‘which visit caused that late delivery in the first place?’ And, ‘what could we have done differently?’”

To measure repeat contacts, one of our readers Emma suggests that if “a customer can call in on multiple lines, it is better to use a customer reference number.”

This works if it is “possible to tag calls and other media channels or similar, otherwise you could miss repeat contacts.”
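Emma's suggestion could be sketched along the following lines. This is only an illustration: the field names (`customer_ref`, `channel`, `reason`, `time`), the seven-day window and the sample records are all assumptions, and a real operation would choose its own tags and repeat-contact window.

```python
from datetime import datetime, timedelta


def find_repeat_contacts(contacts, window_days=7):
    """Return contacts that follow an earlier contact with the same customer
    reference and reason tag within `window_days`, regardless of channel."""
    window = timedelta(days=window_days)
    last_seen = {}  # (customer_ref, reason) -> time of the most recent contact
    repeats = []
    for contact in sorted(contacts, key=lambda c: c["time"]):
        key = (contact["customer_ref"], contact["reason"])
        previous = last_seen.get(key)
        if previous is not None and contact["time"] - previous <= window:
            repeats.append(contact)
        last_seen[key] = contact["time"]
    return repeats


# Hypothetical tagged contacts across phone, webchat and email
contacts = [
    {"customer_ref": "C-1001", "channel": "phone", "reason": "late delivery",
     "time": datetime(2017, 11, 1, 9, 0)},
    {"customer_ref": "C-1001", "channel": "webchat", "reason": "late delivery",
     "time": datetime(2017, 11, 3, 14, 30)},
    {"customer_ref": "C-2002", "channel": "email", "reason": "billing",
     "time": datetime(2017, 11, 2, 10, 0)},
    {"customer_ref": "C-1001", "channel": "phone", "reason": "late delivery",
     "time": datetime(2017, 11, 20, 11, 0)},
]

repeats = find_repeat_contacts(contacts)
print(len(repeats), repeats[0]["channel"])  # 1 webchat
```

Because the key is the customer reference rather than the phone number, the webchat follow-up is caught even though the first contact was a call.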

Should We Still Be Measuring Average Handling Time (AHT)?

There is no doubt that tracking AHT is still important from a capacity management perspective, in terms of scheduling and forecasting, but not as an explicit advisor measure.
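To show why AHT matters for forecasting rather than for advisor targets, here is a minimal sketch. It assumes the common definition of AHT as talk time plus hold time plus after-call work, divided by contacts handled; the function names and figures are hypothetical.

```python
def average_handling_time(talk_s, hold_s, wrap_s, contacts_handled):
    """AHT in seconds: (talk + hold + after-call work) / contacts handled."""
    if contacts_handled <= 0:
        raise ValueError("contacts_handled must be positive")
    return (talk_s + hold_s + wrap_s) / contacts_handled


def workload_hours(forecast_contacts, aht_seconds):
    """Hours of handling work implied by a contact forecast and an AHT."""
    return forecast_contacts * aht_seconds / 3600


# Hypothetical totals for one team over one day
aht = average_handling_time(talk_s=54000, hold_s=6000, wrap_s=12000,
                            contacts_handled=200)
print(aht)                       # 360.0 seconds
print(workload_hours(120, aht))  # 12.0 hours for a 120-contact interval
```

The workload figure feeds scheduling; nothing in the calculation requires an individual advisor to be given a per-call time limit.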

Some contact centres still ask advisors to keep their calls under x seconds, so that the centre can reach a certain service level with the available resources.

However, doing so can have a detrimental impact on service quality, with advisors rushing through calls. So, according to Paul Weald, the contact centre should focus on identifying ways to cut call durations and “getting staff on board without ever mentioning the dreaded three-letter acronym – AHT.”

For example, Mark, one of our readers, analysed common on-hold reasons and examined how different advisors handled each call type.

He found different advisors were using different methods of gathering information from the contact centre systems. Mark then created cheat sheets to streamline the process for common queries.

For more tips on reducing AHT without pressurising advisors, read our article: 49 Tips for Reducing Average Handling Time

Measuring Digital Effectiveness Can Be Beneficial

With new contact centre channels coming into play, the industry is tasked with the question: what and how do we measure digital these days?

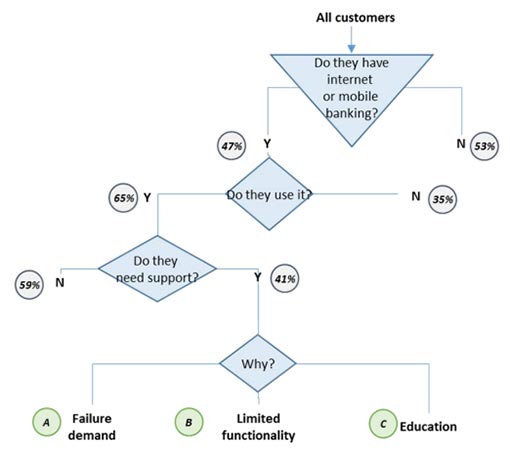

When faced with this question, Paul references a retail banking client who wanted to look beyond the statistics of how many users are registered for the service, delving into the scenarios around digital and mobile banking usage and the level of support required. An illustrative set of results shows that self-service adoption is dependent on several of these factors.

This chart shows that self-service adoption is dependent on several factors

Three specific outcomes – failure demand, limited functionality and education – provided the insight for a number of different process-improvement initiatives that the bank could focus on.

For example, the contact centre could work with marketing to discuss when the right time was to promote the service. Is it now? For this particular client, it was better “to wait until they had some more releases, greater functionality, before they started to promote it.”

So, while this is not necessarily a specific metric, measuring and improving digital effectiveness is a necessary prerequisite to deflecting contact volumes through increasing self-service take-up, eliminating contacts related to failure demand and educating customers to use the service for more transactions.

Don’t Be Too Strict on Service Level

The contact centre industry seems to fall back on a standard service level of 80% of calls answered in 20 seconds.
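For reference, the 80/20 figure is simply the share of calls answered within a time threshold. The sketch below assumes service level is calculated over answered calls only (definitions differ on how abandoned calls are treated), and the wait times are made up for illustration.

```python
def service_level(wait_times_s, threshold_s=20):
    """Percentage of answered calls whose wait was within `threshold_s`."""
    if not wait_times_s:
        raise ValueError("at least one answered call is required")
    within = sum(1 for w in wait_times_s if w <= threshold_s)
    return 100.0 * within / len(wait_times_s)


# Hypothetical wait times, in seconds, for ten answered calls
waits = [5, 12, 18, 20, 25, 40, 8, 15, 95, 10]
print(service_level(waits))                   # 70.0, misses an 80/20 target
print(service_level(waits, threshold_s=120))  # 100.0 with a two-minute threshold
```

The same set of calls passes or fails depending purely on the threshold chosen, which is why it is worth checking the threshold against what customers actually expect.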

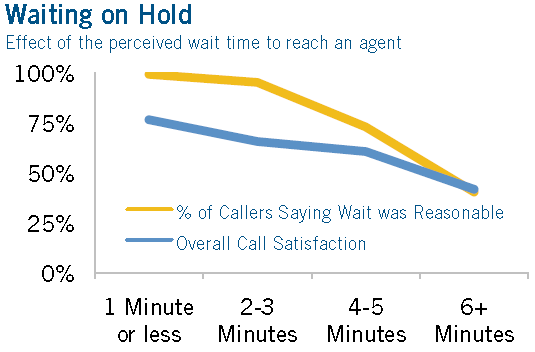

But when Genesys studied this “golden rule” more closely and asked their customers what they expected, the company found that customers were willing to wait longer, in fact up to two or three minutes longer, without it having a negative impact on Customer Satisfaction (CSat).

Another company found no correlation between wait time and CSat for up to six minutes, which is an awfully long time – but it made no difference to the customer Net Promoter Score (NPS) or the actual resolution of the contact.

Vocalabs National Customer Service Survey: Banking

Drawing from this, Mike Murphy, an Account Executive at Genesys, concludes that “maybe we should relax around the 80/20 mark, as maybe it is not the right ambition to achieve.

“Clearly the things that are important are the customer-facing measures like the NPS.”


“In essence, the idea is if you drive for the 80/20 you’re putting stress on yourself and maybe, by doing that, you’re actually going to incorrectly route the call.”

“Ultimately, that will meet your measure, but you’ll probably get a poor customer experience.”

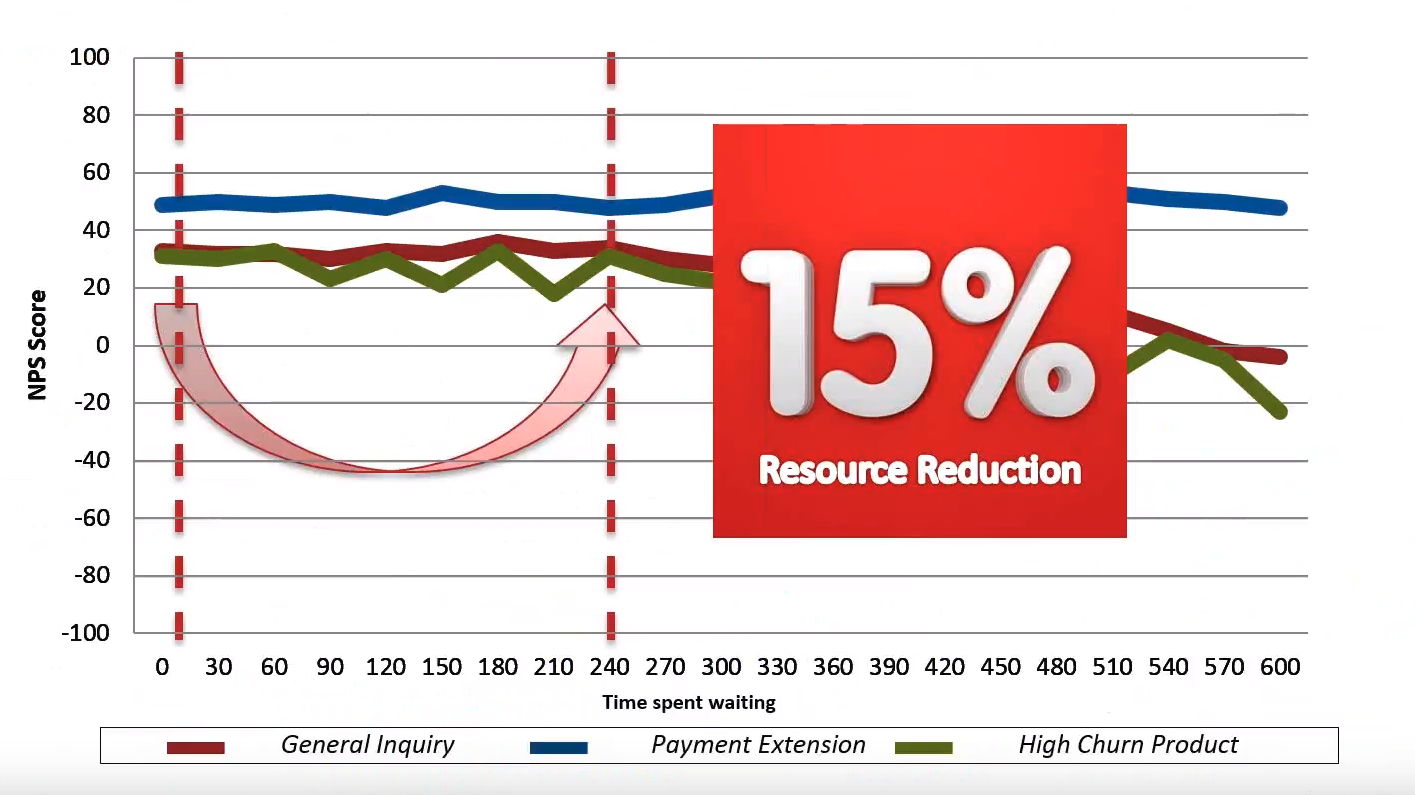

The following case study is based on research from a Genesys customer who took this more relaxed view: instead of focusing on urgency upfront, they took the time to think about where the calls were being placed.

By taking this approach, the company was able to realise a drastic reduction in their resources, as highlighted in the accompanying graph.

For more advice on meeting your service level targets, read our article: 14 Best Practices for Maximizing Your Service Level

For more great insights into contact centre metrics, read our articles:

- 5 Important Call Centre Metrics to Improve Agent Performance

- 10 Metrics to Help You Measure the Customer Experience

- Which KPIs Do I Need for Contact Centre WFM?

Author: Robyn Coppell

Published On: 27th Nov 2017 - Last modified: 2nd Oct 2020
