We discuss how you can optimize your call centre quality assurance (QA) strategy, while also improving motivation, ensuring compliance and enhancing your quality key performance indicator (KPI).

Reset Your Call Centre Quality Assurance Focus

Classic questions that we are frequently asked regarding call centre QA include:

- How many calls and chats should I be monitoring each month?

- What should we do if an advisor pushes back against their QA scores?

- How can I increase advisor buy-in to our QA programme?

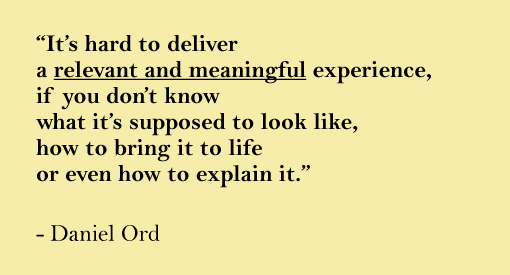

These are all important and relevant questions to ask. But they point to “upstream problems”: there are things that you need to put in place before you try to answer them, according to Daniel Ord, Founder of OmniTouch International.

“When you are setting up your QA programme, the very first thing that you need to do is define what ‘quality’ means to you and your customers,” says Daniel.

QA needs to balance customer and business needs. There should be customer research that dictates what is included in QA scorecards and you should be testing those scorecards against customer satisfaction (CSAT).

Yet, this is rarely the case. Instead, when we ask contact centre professionals about how their QA standards have been developed, we will typically hear things like:

- “I don’t know. When I joined, they were already here.”

- “We did work on it a few years ago, but we haven’t done much with it yet.”

- “We want to change, but we’d like to know what the process is to change.”

Each of these answers suggests that the contact centres in question haven’t defined quality from the point of view of their customers. This can leave advisors confused, particularly when CSAT and QA scores don’t match up.

So, define quality clearly and use your definition to create clear expectations for your team. This will solve a lot of those “upstream” problems further down the line.

Create a Service Delivery Vision

If you were to take ten advisors off the contact centre floor and ask them, “What kind of quality service do we deliver around here?”, you would likely get lots of different answers.

If all of your advisors were to tell you the same thing, that’s a sign of a very good QA programme, as it shows that everybody is on the same page on how to best serve customers.

A service delivery vision can help you meet this goal, if you’re not there already.

This is not the same thing as a company vision. A company vision may guide your service delivery vision, but a service delivery vision is a statement – of no more than three sentences – that describes exactly what kind of service you deliver.

The following video, narrated by Daniel Ord, shows a great example of a service delivery vision and how it helps to set QA standards for customer service reps.

Daniel’s final point in the video is key: “What some people do is skip this entirely and they go to the process of selecting QA performance standards (scorecard criteria) and the behaviours that agents are going to bring to life.”

“But if you have a service delivery vision first, it is going to be a lot easier to pick those performance standards.”

Your customer research should come together into this vision, and it should be made clear to advisors as a statement but also included within your quality standards.

Know Your Call Centre Quality Assurance Scorecard Inputs

Instead of thinking about scorecard inputs or “performance standards”, you can think about advisor behaviours. What are the behaviours that you want advisors to bring to life?

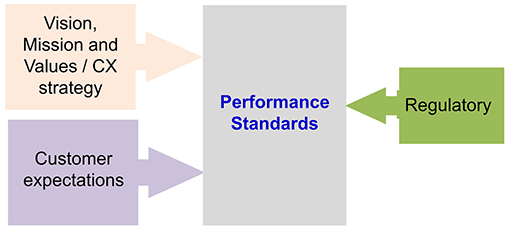

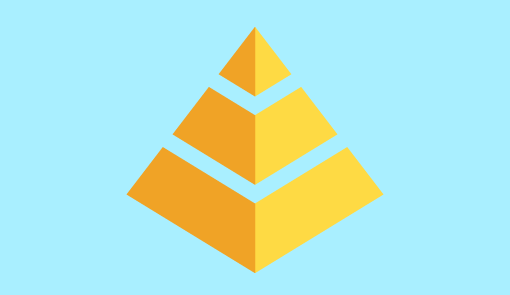

You can categorize each of these behaviours into three categories that are highlighted in the diagram below:

- Vision, Mission and Values / Customer Experience (CX) Strategy – What do you stand for as an organization? What is your service delivery statement? Behaviours that link to these topics will be included within this category.

- Customer Expectations – It is not enough to sit around the boardroom and say: “We assume the customer wants this or that.” You have to do the research into what your customers value most and what drives your CSAT scores. This will help you to better meet customer needs.

- Regulatory – This is where you ensure compliance, so the weighting of regulatory criteria will vary greatly between sectors. For example, if you’re in the financial industry, you’ll have some industry standards around vulnerable customers, so you’ll have some criteria in your scorecard regarding that topic.

These three categories can be useful to keep in mind when you are coming up with your QA inputs, to ensure that your overall scorecard mix addresses each and every one of these ideas.

You can almost think in terms of:

- Who are we?

- Who are they?

- What do the regulators require from us?
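
One way to check that a scorecard mix covers all three categories is to tag each criterion and count the tags. The sketch below is only an illustration: the category keys and the four example behaviours are hypothetical and are not taken from any real scorecard.

```python
from collections import Counter

# The three input categories described above (key names are our own).
CATEGORIES = ("cx_strategy", "customer_expectations", "regulatory")

# Hypothetical criteria for illustration only; replace with your own.
scorecard = [
    {"behaviour": "Uses the customer's name naturally", "category": "cx_strategy"},
    {"behaviour": "Resolves the query on first contact", "category": "customer_expectations"},
    {"behaviour": "Confirms identity before discussing the account", "category": "regulatory"},
    {"behaviour": "Summarizes next steps before closing", "category": "customer_expectations"},
]


def category_mix(criteria):
    """Count how many criteria fall into each of the three categories."""
    counts = Counter(item["category"] for item in criteria)
    return {category: counts.get(category, 0) for category in CATEGORIES}


def missing_categories(criteria):
    """Return the categories that no criterion addresses."""
    return [name for name, count in category_mix(criteria).items() if count == 0]


print(category_mix(scorecard))
print(missing_categories(scorecard))
```

An empty list from `missing_categories` only shows that each category has at least one criterion. Whether the balance between them is right is still a judgement for your organization.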

One final point to remember is, as Daniel says: “There is no magic set of performance standards; they do not exist. What works for a hospital is not going to work for a credit card company.”

It’s very important to take the time and effort to go through this exercise properly for your particular organization.

Understand Your Customer Experience Pyramid

The CX Pyramid is an interesting concept, as it shows how a customer evaluates their experience with you across three different elements.

The three elements of the CX Pyramid – which is a Forrester Model that was adapted from Elizabeth B. N. Sanders’ paper “CONVERGING PERSPECTIVES: Product Development Research for the 1990s” – are:

- Enjoyable (Top Layer) – “I feel good about that.”

- Easy (Middle Layer) – “I didn’t have to work hard.”

- Meets Needs (Bottom Layer) – “I accomplished my goal.”

What relevance does this have to QA, you may ask? Well, you can apply the logic of meeting each of these three customer needs when determining your scoring criteria.

Daniel says: “I find it very useful, when I start to gather together all of my different performance standards, to filter them through this pyramid, to make sure that we are addressing the ‘meets needs’, the ‘ease’ and the ‘emotion’.”

This final point of planning what kind of emotional outcome you would like customers to have is something that’s gaining a lot of momentum within the contact centre space.

If you can define the emotions that drive value for you and work those into your service delivery vision, coaching and, of course, QA scorecards, you can start to evoke some really positive and beneficial emotions from your customers.

For more on the topic of emotion within customer experience, read our article: 7 Steps to Evoke the Emotions You Want From Your Customers

Remember, Not Everything Has an Equal Weight

Now that you have a batch of criteria, you are going to have to lay them out into a scorecard. But not every performance standard on your QA form should have equal weight.

For example, many believe that tone of voice matters more than what the advisor says in a greeting. You may also think that in a live chat, empathy will matter more than grammatical errors.

What “matters” will ultimately reflect whether your brand is customer- or business-orientated, as your CX vision performance standards, customer expectation standards and regulatory standards don’t need to make up a third of the scorecard each.

“These weightings should signal to advisors the areas that matter most, so you are again setting the right expectations,” adds Daniel.
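
To make the idea of unequal weights concrete, here is a minimal sketch of a weighted scorecard calculation. The criterion names and point values are assumptions chosen to echo the examples above (tone and empathy weighted above greeting wording and grammar); they are not recommended weightings.

```python
# Hypothetical weights in points. They happen to total 100 here, but the
# function normalizes by the total, so any positive values work.
weights = {
    "tone_of_voice": 30,
    "empathy": 30,
    "accurate_resolution": 25,
    "grammar": 10,
    "greeting_wording": 5,
}


def weighted_score(weights, results):
    """Return a 0-100 score in which each met criterion earns its weight."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must add up to a positive total")
    earned = sum(points for name, points in weights.items() if results.get(name, False))
    return 100 * earned / total


# One hypothetical evaluation: True means the behaviour was demonstrated.
evaluation = {
    "tone_of_voice": True,
    "empathy": True,
    "accurate_resolution": True,
    "grammar": False,
    "greeting_wording": False,
}

print(weighted_score(weights, evaluation))  # 85.0
```

In this sketch, missing the two lightly weighted criteria costs 15 points, whereas missing tone of voice alone would cost 30. That difference is the signal the weightings send to advisors.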

Of course, you can’t change these weightings every day or every week, as that would be very chaotic. But it is unlikely that you will get your scorecard balance right at the first try.

To assess the effectiveness of your QA scorecard, you should be looking for correlation between QA scores and other customer experience metrics and testing the scorecard with teams of analysts. This will also help to remove any scorecard subjectivity.
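
As a rough illustration of looking for correlation between QA scores and another customer experience metric, the sketch below computes a Pearson correlation coefficient by hand. The five paired values are invented for the example, and a sample this small would not prove anything on its own.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of the same length (at least 2)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when one series is constant")
    return covariance / sqrt(spread_x * spread_y)


# Hypothetical monthly averages per team: QA score (%) and CSAT (1-5 scale).
qa_scores = [72, 80, 85, 90, 95]
csat_scores = [3.1, 3.4, 3.9, 4.2, 4.6]

r = pearson(qa_scores, csat_scores)
print(f"Correlation between QA and CSAT: {r:.2f}")
```

A value near 1 suggests the scorecard is rewarding behaviours that customers also value. A value near 0 suggests the scorecard and your customers are measuring different things, which is a prompt to revisit the criteria or their weightings.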

Also, as Tersea – one of our readers – suggests: “Revisit quality scorecards every time a new product is introduced, as a minimum, and make this action part of regular service planning.”

You’re Either Helping or You’re Keeping Score

When you are sitting next to an advisor and talking to them specifically about their interaction, you are doing either one of two things: helping them or keeping score.

If you have a form and there is a score on there, no matter how good a discussion you have with your advisors, they will only have one thought in their mind: what’s my score?

In addition, when you have a scoring system where 85% equates to a pass, an advisor who gets 86% is happy with that because they have passed. So, subconsciously, many advisors will feel like they don’t need to listen any more.

“Remember, when you’re holding up a score, you’re judging them. You’re taking a position of power and putting them into a subservient position,” says Daniel.

“It’s very difficult to coach to a score and, while people do need a gauge for how they are doing, you’re not necessarily helping advisors by only giving them a score, you’re just telling them how they did on one particular call.”

So, you need to see the power of both scoring and helping to ensure that you have focused QA sessions. You cannot focus the majority of your QA programme on scoring and then wonder why advisors are pushing back so much.

Handing somebody a scorecard is not a behavioural change dynamic, but helping someone on a regular basis is, and the more you help someone, the better they will score.

As our reader Renee recommends: “We don’t share scores with agents any longer and only coach on behaviours. Scores are used for helping us recognize learning opportunities.”

For advice on how to provide feedback in a way that helps your people, read our article: Contact Centre Coaching Models: Which Is Best for Your Coaching Sessions?

How Many Contacts Should You Be Monitoring?

Don’t worry about measuring enough calls to be confident of an advisor’s performance level across the month, because you are never going to manually monitor enough contacts to be statistically viable.

What you really need to do here is change the question and break it into two separate questions:

- How many helping sessions are you doing per month?

- How many scoring sessions are you doing per month?

This is according to Daniel, who says: “As a team leader, at a minimum, you should be talking to every agent that works with you at least once a week in a helping manner.”

“There is no keeping score here and if this target is unachievable, then I have a big question for you: where are you spending your time?”

Generally, when a team leader goes beyond this target – maybe even helping every advisor on their team once a day – that will tell in both QA scores and team camaraderie.

When it comes to how many contacts to score each month, please be careful of supposed industry benchmarks. Quality assurance programmes vary from scoring 6 contacts per month to scoring 15.

This raises the question, is it really fair to summarize an advisor’s monthly performance – where they take hundreds of calls – based on just a few contacts?

Well, Daniel says: “No, it isn’t. But look at it more as an audit… You are auditing to find where the developmental needs are and also doing the helping, so then you have both sides of the coaching picture covered.”
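
To see why a handful of scored contacts works better as an audit than as a measurement, here is a back-of-the-envelope sketch of the margin of error on an advisor’s pass rate. It uses the standard normal approximation for a proportion with a finite population correction, which is itself crude at sample sizes this small. The figure of 400 calls a month is an assumption made for the example.

```python
from math import sqrt


def margin_of_error(sample_size, population, pass_rate=0.5, z=1.96):
    """Approximate 95% margin of error for a proportion (normal approximation).

    pass_rate=0.5 is the most cautious assumption; z=1.96 corresponds to
    a 95% confidence level.
    """
    if not 0 < sample_size <= population:
        raise ValueError("sample_size must be between 1 and population")
    standard_error = sqrt(pass_rate * (1 - pass_rate) / sample_size)
    # Finite population correction: the sample is drawn from a fixed
    # number of calls, not an unlimited supply.
    if population > 1:
        correction = sqrt((population - sample_size) / (population - 1))
    else:
        correction = 0.0
    return z * standard_error * correction


MONTHLY_CALLS = 400  # hypothetical volume for one advisor

for scored in (6, 15):
    moe = margin_of_error(scored, MONTHLY_CALLS)
    print(f"{scored} contacts scored: about +/- {moe * 100:.0f} percentage points")
```

Under these assumptions, the margin is roughly 40 percentage points when 6 contacts are scored and roughly 25 when 15 are scored. Either way, it is far too wide to separate an 84% advisor from an 86% one.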

Some More Ideas for Smarter Call Centre QA

Alongside the advice of Daniel and our readers, we have also gathered some insights from contact centre QA expert Tom Vander Well, who is CEO of Intelligentics.

Below are some of his suggestions for taking your contact centre QA programme to the next level.

1. Start with a one-time team assessment and set expectations from then on.

2. Gather CSAT data to drive what you measure in QA and how you weight it.

3. Assess situations driving customer dissatisfaction and factor that into your quality scorecards.

4. Have multiple scorecards for multichannel assessments – as what drives CSAT is different on each channel and criteria will have to change.

5. Assess customer experience outside advisor interactions (IVR, hold messages, queue lengths, etc.) and consider how these factors influence your quality scores.

6. Do an assessment of specific call types, including:

- Critical scenarios and how they are handled (try secret shopper model)

- Unusually long calls and what drives them

- Calls with multiple holds and transfers

7. Assess customer interactions outside of customer support and try to embed these communications within your overall QA programme.

8. Between official QA assessments, “spot check” key behaviours, ensuring advisors are well aware of which behaviours are indeed key.

9. Focus energy where it will make the most impact – including newer and lower performers – but make sure you don’t single them out in front of everyone and don’t completely neglect your high performers.

For more great QA insights from Tom, read our article: Call Centre Quality Parameters: Creating the Ideal Scorecard and Metric

In Summary

Quality assurance begins with quality. You have to define what kind of quality you are going to deliver, and a service delivery vision can be a powerful way to do that.

Then, make sure you know your QA inputs and use a customer experience paradigm, like the CX pyramid, to ensure that you have accounted for needs, ease and emotion.

Also, to ensure that you measure the right things and set the right expectations, you need to weight and test your scorecards.

With these scorecards, you can then consider how to balance your time between helping and scoring – recognizing the importance of both.

Good luck!

For more advice on creating a great contact centre quality assurance programme, read our articles:

- The Quality Problem: Good Advisors Stay Good – Average Advisors Stay Average

- 30 Tips to Improve Your Call Quality Monitoring

- How to Calibrate Quality Scores

Author: Robyn Coppell

Reviewed by: Megan Jones

Published On: 14th Aug 2022 - Last modified: 18th Mar 2024
