Creating a quality scorecard is essential for maintaining high standards in your contact centre. A well-designed scorecard helps you evaluate customer interactions, ensure consistency, and identify areas for improvement.

To get you started, we’ve put together advice on how to create the best call centre quality scorecard template in Excel.

Plus, we’ve also provided a free Excel template example to help you get started, ensuring your team delivers exceptional customer service every time.

Why Have a Quality Monitoring Scorecard?

Perhaps the most obvious reason for developing a call centre quality assurance (QA) monitoring scorecard is to measure advisor performance. However, having a good quality scorecard has a number of other benefits.

Martin Jukes, the Managing Director at Mpathy Plus, believes that having the right scorecard for your contact centre will also enable you to:

- Change advisor behaviour

- Ensure compliance with regulations

- Report quality as a key performance indicator (KPI)

- Measure the customer experience

- Motivate the team

- Assess the quality of individual advisors

So, how can we ensure that our scorecard provides us with each of these benefits?

7 Steps to Creating a QA Scorecard

We’ve put together a few simple steps to help you design a QA scorecard that effectively evaluates performance, ensures consistency, and helps improve your call centre’s customer service.

1. Decide What Needs to Be Included in the Quality Monitoring Scorecard

The first point that we need to make here is that measures, including quality scorecards, need to be specific to your organisation.

At the most basic level, working in a service environment is very different from working in a sales environment, and you therefore have to measure different things.

“You have different measures for different purposes, so it’s important to focus on what’s right for your organisation.

Ask yourself: what are the objectives of measuring quality? Then, make sure that your scorecard measures those things.” – Martin Jukes

So, think about how you can link your customer service strategy to your scorecard:

- Do you have compliance issues that need to be considered?

- Are you trying to follow a process?

- Do you want to measure specific soft skills?

As Martin reminds us, “Every organisation will have individual requirements to meet its individual needs, so elements included in the scorecard should be bespoke to your own customer and business needs – so you measure what’s important.”

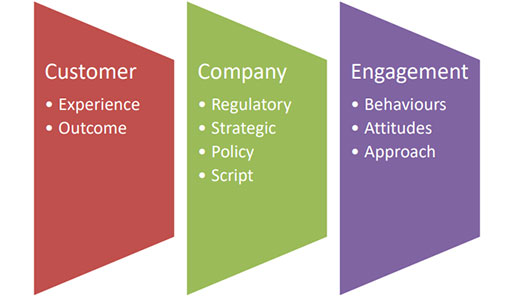

These elements can be categorised into three key areas: customer, company and engagement. Each element is then weighted differently, depending on its importance.

2. Apply the Three Key Areas of Quality Assurance

We derive each of the elements included in our scorecards from the key areas below. Each is accompanied by some advice for choosing what is relevant to measure.

Customer

Think about what’s important to the customer.

Create focus groups to find out what matters most from their perspective and find objective ways to measure that. Is it getting the right outcome? Advisor courtesy? Minimising effort?

Some examples of customer criteria to include in the QA scorecards:

- Did the advisor address the customer in the way they would like to be addressed?

- Did the advisor answer all the points in order of importance to the customer?

- Did the advisor show empathy by reflecting back their experience?

Company

Are you meeting regulatory requirements? What about wider organisational goals?

Gather the senior members of the contact centre team and ascertain your business needs. This will enable you to create and add criteria that support your company’s objectives.

Some examples of company criteria to include in the call centre quality monitoring scorecard:

- Did the advisor read the compliance statement in the script at the start of the call?

- Did the advisor notify the customer of all the relevant documentation?

- Did the advisor offer transaction confirmation?

Engagement

This is a more subjective area of behaviours, attitudes and the approach of the advisor in helping to deliver good customer service.

While the “customer” criteria dealt with what needs to be said, this area takes a closer look at the advisor’s communication style and assesses whether it was right for that particular customer.

Some examples of engagement criteria to include in the scorecards:

- Has the advisor used effective questioning skills?

- Has the advisor used reflective listening to show understanding of the customer’s issue?

- Has the advisor taken ownership of the customer’s problem?

So, these are the three key areas of QA, but they do not necessarily all have the same level of importance. That depends on the nature of your organisation.

3. Add Weighting to the Contact Centre Quality Monitoring Scorecard

Many contact centres will give each of these three areas equal weighting, considering them to have equal value, so they will each make up a third of the overall quality score.

However, certain industries may give higher value to the “company” section of the scorecard, as compliance is a key objective. These industries will likely include sectors such as banking.

On the other hand, some industries – which may include sectors such as retail – will be more focused on meeting customer expectations and will have little in the way of regulations to take into account. These industries will give higher weighting to the “customer” and “engagement” areas.

But what about the individual elements within each of these three categories? Should they have the same weighting?

The answer is no. We can go back to our customer focus groups and ask them what they consider to be the most important factors in good customer service. This research will tell us which elements should be given a higher weighting in the “customer” and “engagement” areas.

Weighting the “company” criteria is simpler. Gather your senior management and discuss how important each element is and how it should be weighted.
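As a rough illustration of the weighting described above, here is a minimal Python sketch that combines a score for each of the three areas into one overall quality score. The weights, the 0 to 100 scale and the function name are assumptions made for the example, not recommended values; your own focus groups and senior management should set the real numbers.

```python
# Hypothetical area weights: a regulated business might favour "company".
AREA_WEIGHTS = {"customer": 0.3, "company": 0.5, "engagement": 0.2}


def weighted_quality_score(area_scores, area_weights):
    """Combine per-area scores (each 0-100) into one overall score.

    Weights are normalised, so they do not have to sum to exactly 1.
    """
    total_weight = sum(area_weights[area] for area in area_scores)
    if total_weight <= 0:
        raise ValueError("area weights must sum to a positive number")
    weighted_sum = sum(
        score * area_weights[area] for area, score in area_scores.items()
    )
    return weighted_sum / total_weight


# Illustrative scores for one contact, not real data.
example_scores = {"customer": 80.0, "company": 100.0, "engagement": 60.0}
print(round(weighted_quality_score(example_scores, AREA_WEIGHTS), 1))
```

Passing equal weights for all three areas gives the "one third each" approach that many contact centres use, so the same function covers both cases.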

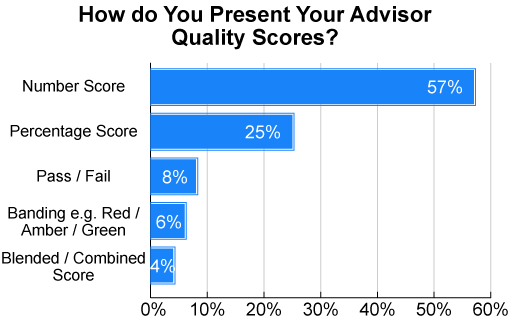

4. Decide How Customer Service Advisors Are Scored

There are a number of different choices for how you score an advisor’s performance, whether that is through yes or no tick boxes, a red/amber/green system or a numbered scale. So which is the right option for you?

“The best method to use is likely to be a combination, as there are questions which are quite binary – where you can say yes or no – yet there are also questions which are more subjective and will likely require a numerical score.” – Martin

This may make the maths part a bit trickier, but if you use tick boxes for a subjective question like “Has the advisor used effective questioning skills?”, it can be difficult to answer yes or no.

For example, maybe the advisor used one questioning skill well but it was not the best option. Or the advisor might have chosen the right skill but could improve their delivery.

So, to ensure analysts are scoring advisors evenly, it is good to have a combination of scoring scales and run calibration sessions as well.

Don’t Forget to Include an N/A Option

Not every element of a quality scorecard is applicable or appropriate for every contact, so N/A (Not Applicable) is a valid entry in some cases, according to Martin Jukes:

“There are two key scenarios when the analyst can tick the N/A box: where there are inappropriate rules or irrelevant questions.

An example of when there is an inappropriate rule could be when the customer says that they want to be passed right through to their Account Manager. In this short and simple transactional call, it would be inappropriate for the advisor to say the customer’s name three times, which could be part of your quality criteria.

In terms of an irrelevant question, let’s use the example of a customer closing their account. In this scenario, it would be pointless to ask ‘is there anything else that I can help with?’ at the end of the call, which could again be part of your quality criteria.”

So, it’s a good idea to include an N/A box next to each question on the scorecard. If it’s ticked by the analyst, we can then exclude the entire question from our final results, so that it has no impact on the advisor’s overall score.
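To show how yes/no questions, numbered scales and N/A entries can sit in the same calculation, here is a small Python sketch. It assumes that every answer is converted to a fraction of its maximum value, that numbered scales start at 0, and that N/A questions are left out of both the points earned and the points available. The class, field names and example questions are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Answer:
    question: str
    weight: float            # relative importance of the question
    value: Optional[float]   # None means the analyst ticked N/A
    max_value: float = 1.0   # 1 for yes/no, e.g. 4 for a 0-4 scale


def score_contact(answers):
    """Return a 0-100 score, ignoring any question marked N/A."""
    applicable = [a for a in answers if a.value is not None]
    total_weight = sum(a.weight for a in applicable)
    if total_weight == 0:
        return None  # nothing applicable to score
    earned = sum(a.weight * (a.value / a.max_value) for a in applicable)
    return 100 * earned / total_weight


# Illustrative contact: one yes/no item, one scaled item, one N/A item.
example_answers = [
    Answer("Compliance statement read?", weight=2, value=1),
    Answer("Effective questioning skills?", weight=1, value=3, max_value=4),
    Answer("Offered further help?", weight=1, value=None),
]
print(round(score_contact(example_answers), 1))
```

Because the N/A question is dropped from the denominator as well as the numerator, the advisor is neither rewarded nor penalised for it.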

5. Test Your Scorecard’s Effectiveness

Once you have created a scorecard, bring a team of people together and score a call. Then, have a discussion over whether the score is an accurate representation of what happened in that contact. Does it feel right?

If not, why doesn’t it? Is the scorecard missing a couple of key questions? Are you giving too much value to certain elements on the scorecard?

By asking yourself these questions and listening to a number of different contacts, you’ll be able to identify areas in which you could be giving the advisor too much credit or find elements that need to be given a greater weighting.

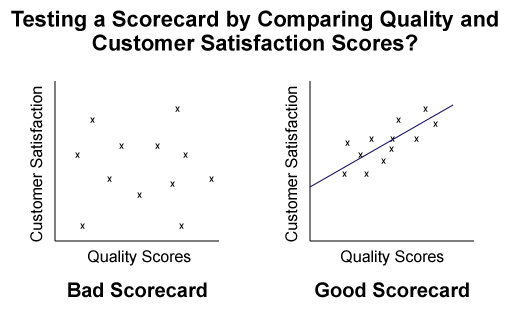

Once you have done this, you are ready to take a more scientific approach to testing your scorecard, which involves investigating the relationship between your quality and CSat scores.

Compare With Customer Satisfaction Scores

You want to score an advisor’s performance through the eyes of the customer, so you gain the best possible measure of the customer experience. Therefore, theoretically, the better your quality scores, the better your CSat scores.

So, trial your scorecard against contacts where the customer has left a CSat score, which most contact centres attain by asking the customer the following question after their contact: “On a scale of one to ten, how satisfied were you with the service provided?”

Then, create a scatter graph where one axis denotes the CSat score and the other denotes the quality score. For each contact for which you have both a CSat and quality score, plot an “x” at the corresponding point on the graph.

This scatter graph will allow you to test the effectiveness of your scorecard. If the points cluster around an upward-sloping line of best fit, you know that you have produced a scorecard that successfully mimics the viewpoint of your customer base.

But remember to create separate graphs for each of your different scorecards and for your different channels. Otherwise, you are likely to see little to no correlation.
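Alongside the scatter graph, a correlation coefficient puts a number on how closely quality and CSat scores move together. The Python sketch below computes Pearson's r by hand, which is one common choice rather than the only one. The function name and the scores are made up for illustration; in practice you would run it separately for each scorecard and each channel.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of the same length, 2+ items each")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("scores must vary for a correlation to exist")
    return covariance / math.sqrt(var_x * var_y)


# Illustrative data: one pair of scores per contact.
quality_scores = [60, 70, 80, 90]
csat_scores = [5, 6, 8, 9]
print(round(pearson_r(quality_scores, csat_scores), 2))
```

A value close to 1 suggests the scorecard is tracking what customers care about, while a value near 0 suggests the questions or weightings need another look. With only a handful of contacts the figure can be misleading, so it is worth collecting a reasonable sample first.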

6. Hold Calibration Sessions to Help Remove Subjectivity

Calibration sessions are meetings where all your quality analysts gather together and listen to, or read, the same contacts before creating individual scorecards.

The analysts will then compare scorecards with one another, and if there are any discrepancies, look to form a mutual understanding of how each element should be scored.
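One way to prepare for that discussion is to list the elements where analysts' scores for the same contact differ most. The Python sketch below is only an illustration: the analyst names, element names, scores and the tolerance of one point are all assumptions.

```python
def calibration_gaps(scores_by_analyst, tolerance=1.0):
    """Return {element: spread} where analysts differ by more than tolerance.

    The spread is the highest score minus the lowest score for an element.
    """
    elements = set()
    for scores in scores_by_analyst.values():
        elements.update(scores)

    gaps = {}
    for element in sorted(elements):
        values = [
            scores[element]
            for scores in scores_by_analyst.values()
            if element in scores
        ]
        spread = max(values) - min(values)
        if spread > tolerance:
            gaps[element] = spread
    return gaps


# Illustrative scores from three analysts for the same contact.
example_session = {
    "Analyst A": {"Effective questioning": 4, "Ownership": 3},
    "Analyst B": {"Effective questioning": 2, "Ownership": 3},
    "Analyst C": {"Effective questioning": 3, "Ownership": 4},
}
print(calibration_gaps(example_session))
```

Elements that keep appearing in this list across sessions are good candidates for clearer scoring guidance.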

In addition to this, Reg Dutton, Client Manager at EvaluAgent, suggests that you “include advisors in your quality calibration sessions and get them to share their ideas, perceptions and thoughts around quality and how customers should be treated.”

“Not only can advisors have some great ideas that will help you tighten up your quality guidelines, you will be engaging them with quality and demonstrating what you are doing to make the process as fair as possible.”

To find out how you can run the best possible calibration sessions, read our article: How to Calibrate Quality Scores

7. Use Your QA Scorecard

You need to make sure that quality scorecard data is easy to share with advisors. Then, as Martin tells us: “You should be able to use the data to identify training needs, review overall performance and present positive and negative findings.”

One good practice that the DAS contact centre in Caerphilly uses, in regard to sharing contact centre data with the team, is to feed back the entire scorecard, not just the score, to advisors with simple, but effective, additions.

These additions include comments like “nice”, little “well dones” as extra recognition for things that the advisor did really well, and a recording of the contact, so advisors can listen back to the call for themselves. This helps to give the analyst’s comments greater context.

Identifying Trends in Advisor Performance

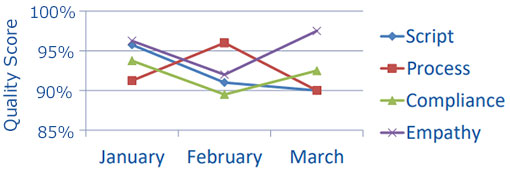

To track the behavioural trends that are taking place in the contact centre, it is good to track the progression of quality scores at an individual and team level.

“It’s easy to fix poor trends through reinforcement, but getting to the point of the cause of behavioural change is what will really make the difference longer term to the organisation’s quality performance.” – Martin

Also, this analysis can really help you to gauge the impact on performance of any changes that you make to the contact centre. For example, when you bring in a new technology, has it helped to boost advisor performance?

At an individual level, tracking advisor quality scores will enable you to highlight advisors who deserve reward and recognition, while also providing insight into whose performance may be dwindling. Once you know this, you can find out why and better support them.

Then, there are certain elements of an advisor’s performance that you can take a closer look at. Breaking up the scorecard and looking at individual elements, such as the progression of compliance, script adherence and certain soft skills, can be a useful exercise.
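A simple way to spot these trends is to compare an advisor's most recent evaluations with the ones before them. The Python sketch below assumes scores are stored oldest first and uses a window of three evaluations. The window size, the advisor labels and the scores are illustrative only, and the same function could be applied to a single element such as script adherence.

```python
from statistics import mean


def score_trend(scores, window=3):
    """Recent average minus the previous average over `window` evaluations.

    Returns None when there is not enough history to compare.
    """
    if len(scores) < 2 * window:
        return None
    recent = mean(scores[-window:])
    previous = mean(scores[-2 * window:-window])
    return recent - previous


# Illustrative quality scores, oldest first.
example_history = {
    "advisor_a": [70, 72, 74, 80, 82, 84],
    "advisor_b": [85, 84, 86, 78, 75, 72],
    "advisor_c": [90, 88],
}
for advisor, scores in example_history.items():
    print(advisor, score_trend(scores))
```

A positive result points to an advisor who may deserve recognition, and a negative one to someone who may need support. The number only says that something has changed, so finding the cause still takes a conversation.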

Example QA Scorecard Template in Excel – Free Download

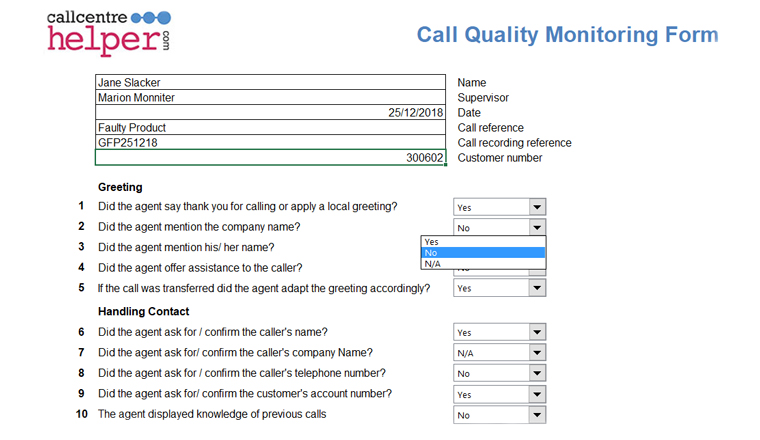

We have an example of a quality scorecard, which you can download for free and modify to use for yourself.

The scorecard shows how a combination of scoring techniques can be used together (both tick boxes and numbered scales), while it also includes questions from each of our three key areas: customer, company and engagement.

However, please remember that this scorecard has been developed for the voice channel only and that it should be tested thoroughly, using the advice above, before it is implemented in your contact centre.

Get your free download of the Excel Call Monitoring Form.

Two Final Considerations for QA Scorecards

Don’t Use the Same Scorecard Across Every Channel

There are different rules of engagement across different channels, as the type of conversation from one channel to the next is completely different, according to Martin Jukes.

“It’s really important that the difference in the nature of conversation between channels is reflected in the questions that you ask, as customers have different levels of expectations across different channels.” – Martin

In fact, if you are using the same quality scorecard across different channels, it is likely that quality scores will vary drastically from one channel to another and that quality will not reflect Customer Satisfaction (CSat) scores on certain channels.

This is a problem because making sure there is correlation between quality and CSat scores is important, as you want to ensure that you are measuring what is in the best interest of your customers.

Remember to Assess Quality of Automated Channels Too

There are a number of emerging channels – such as mobile apps, automated phone menus and self-service bots – which don’t actually use an advisor. However, this doesn’t mean that they are exempt from your quality programme.

Martin says: “It’s important to think about the quality of all channels, as opposed to focusing solely on the advisors. We must think about quality beyond the advisor level.”

In terms of assessing quality on channels that require no human interaction, let’s consider IVR self-service. It is likely that your contact centre has not updated the messaging or structure of the IVR in a long time. Does it still fit your brand? That’s something that you need to test.

Then there are the processes that advisors can do nothing about but which have a strong influence on call quality. These include things like:

- How easy is it to access the necessary information?

- How long are queue times? Are they impacting CSat?

- Have we continually coached advisors enough to have the appropriate knowledge?

When scoring advisors, we need to be mindful of what they can and cannot control, in order to make the process fair.

So it’s good for the analyst to make a note of anything that they feel inhibited the advisor’s performance and for you – as the contact centre manager – to listen out for any recurring problems.

For more on quality management in the contact centre, read the following articles:

- Call Centre Quality Parameters: Creating the Ideal Scorecard and Metric

- The Quality Problem: Good Advisors Stay Good – Average Advisors Stay Average

- 30 Tips to Improve Your Call Quality Monitoring

Listen to Our Podcast

Find some additional ideas for improving quality in the contact centre by listening to the following episode of The Contact Centre Podcast, which features a conversation with QA Expert Martin Teasdale.

The Contact Centre Podcast – Episode 8:

How To Extract More Value From Your Call Center Quality Programme

For more information on this podcast, or alternative ways to listen, visit Podcast: How to Extract More Value From Your Contact Centre Quality programme

Author: Charlie Mitchell

Reviewed by: Robyn Coppell

Published On: 6th Mar 2019 - Last modified: 11th Dec 2024
