Here we take you through how to create the ideal contact centre quality scorecard, while giving tips for creating a measurable quality score metric.

Assess What Matters to the Customer

When creating a quality scorecard, it is important to start by asking two simple questions:

- What matters to the customer?

- What matters to the business?

This is according to Tom Vander Well, President/CEO of c wenger group, who says: “One thing that many contact centres fail to do is really consider what’s important to the customer, or they make assumptions about what’s important.”

“So, I always encourage contact centres to start with a Customer Satisfaction (CSat) survey, because what matters to customers will vary from market to market and company to company.”

These CSat surveys could be used in customer focus groups and can include a simple question such as: “What do you think good customer service looks like?” From the responses, the contact centre can start to form a good idea of which advisor behaviours are most important to customers.

Tom gives the example of “if you found that courtesy and friendliness were the biggest driver of customer satisfaction, then you should really focus your scorecard, and the weighting of your scorecard, on these behavioural elements.”

Other ways to gather customer expectations and to find out what matters most to them, besides focus groups, include mystery shopping and running a “Chief Executive for the day” initiative.

Assess What Matters to the Business

In addition to assessing what matters to the customers, senior members of the contact centre team should gather to discuss what matters to the business, e.g. compliance.

Then, it’s important to consider the weighting of the scorecard. While most are more heavily focused on meeting customer expectations, contact centres in certain industries, such as banking, will be more likely to have an increased focus on meeting business needs.

We discuss how to ideally weight a quality scorecard, in the “Create a Measurable Quality Score Metric” section of this article.

Define What’s Important and Create Criteria

Once the contact centre has defined what is important to the customer and the business, it is time to put together the scorecard.

This can be tricky, and contact centres are faced with two options: create very specific, activity-based criteria, or produce more generic criteria that are backed up with regular calibration sessions.

Each of these options has a drawback and requires a significant amount of time and effort to perfect.

Option 1 – Be Very Specific (Activity Based)

Option one would be to present analysts with a list of activities that represent an idealised behaviour. Each is relatively straightforward to mark yes or no, depending on whether or not the advisor did that activity.

As an example of very specific criteria, Tom Vander Well gives a generic list of the components that make up a good contact centre greeting, which analysts can simply respond yes or no to:

- Use “hello” or similar salutation

- Identify self

- Identify department

- Use “may I help you” or a similar inviting question

The problem – Justin Robbins, contact centre and customer service expert, identifies an issue with this method and cites a recent visit to a contact centre that uses this method of scoring advisors as an example.

At this contact centre, Justin asked the team: “What do you really want the advisors to do?” They said that “we want them to build a relationship, we want them to build rapport with the customer.”

So, in response, Justin said: “What if you give advisors the freedom of saying what we want you to do is build a rapport and we will judge, through the quality process, how well you do that. This is rather than using this activity of using the customer’s name three times, which may not do anything to build rapport.”

This experience cemented Justin’s belief that quality frameworks should be based on results and not activity; however, this isn’t necessarily the most efficient method.

The fix – While taking Justin’s opinions on board, contact centres should not necessarily skip ahead to option two, as this method can be optimised through great attention to the weighting process and testing, as explained later.

This is a screenshot of our call quality monitoring form, with clearly defined criteria

Option 2 – Ask Generic Questions (Results Based)

Option two would be to ask analysts generic questions that focus on results more than activities, with analysts simply responding yes or no, as before. For example, an analyst would be asked to tick yes or no to questions such as:

- Did the advisor successfully greet the caller?

- Was the advisor successful in building rapport?

- Did the advisor present all possible options to the customer?

The problem – The problem with asking these vague questions is that analysts can interpret each element in opposing ways, which can lead to suggestions of unfair treatment from advisors.

For example, Tom notes that he recently came into a contact centre that asked analysts to tick yes or no in response to the question: “Did you delight the customer?” Yet this is a question that is unlikely to have a clear answer.

Another example would be a question like: “Did the advisor answer the question?” But Tom points out that “the advisor may have answered the question, but perhaps not correctly, clearly or concisely. Or maybe the advisor correctly answered one question but didn’t answer another. So, in this scenario, does the advisor deserve credit or not?”

Also, Tom says that results-based quality assurance (QA) can be problematic if the “analyst is determining the quality of the advisor’s performance based on their own perceptions of the outcome, and not on defined criteria based on quantifiable customer survey data.”

“I’ve experienced many an internal QA analyst who judged a call by saying, ‘If I was this customer…’ and then scored the advisor’s performance according to their personal judgement as an internal supervisor or QA analyst. But they are not the customer.”

“It’s easy to let personal preference or insider knowledge skew the opinion. Better to say, ‘Our customer survey/research data reveals that “x” is a key driver of satisfaction, therefore I know that the CSR doing “y” will positively impact the caller’s perception of “x”.’”

The fix – The answer to this issue would be to run regular calibration sessions, to ensure that each analyst goes through the same thought process when determining an answer to each question.

While calibration sessions would also be helpful when choosing option one, it is much easier to spot activities as opposed to results.

It is likely that different analysts will have opposing opinions of what equates to a “yes”, so make sure that calibration sessions produce a document of guiding principles to remove subjectivity.
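
One way to decide which questions most need written guidance is to have several analysts score the same call and measure how often they agree. The sketch below is only an illustration in Python: the function name `agreement_by_question`, the analyst labels, the sample answers and the 80% threshold are all assumptions, not part of any tool mentioned in this article.

```python
from itertools import combinations


def agreement_by_question(answers):
    """answers maps analyst -> {question: True/False} for the same call.

    Returns question -> share of analyst pairs that gave the same answer.
    """
    analysts = list(answers)
    if len(analysts) < 2:
        raise ValueError("need at least two analysts to compare")
    pairs = list(combinations(analysts, 2))
    questions = answers[analysts[0]].keys()
    return {
        q: sum(answers[a][q] == answers[b][q] for a, b in pairs) / len(pairs)
        for q in questions
    }


# Hypothetical calibration session: three analysts score one call.
calibration_call = {
    "analyst_a": {"greeted caller": True, "built rapport": True, "presented all options": False},
    "analyst_b": {"greeted caller": True, "built rapport": False, "presented all options": False},
    "analyst_c": {"greeted caller": True, "built rapport": False, "presented all options": True},
}

agreement = agreement_by_question(calibration_call)

# Assumed threshold: questions where fewer than 80% of pairs agree
# are candidates for a written guiding principle.
needs_guidance = [q for q, share in agreement.items() if share < 0.8]
print(needs_guidance)
```

Questions that appear in `needs_guidance` are the ones where analysts are interpreting a “yes” differently, so they are a sensible starting point for the guiding-principles document.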

Tip – Remember Less Isn’t Always More

Most companies want to drive the scorecard towards fewer questions, thinking it’s easier because ‘we only ask three questions’, for example. There was one contact centre that thought this and only asked:

- Was the customer happy?

- Did you represent the brand?

- Did you answer their questions?

Unfortunately, this again brings about the issue that there are a million ways in which an analyst could decide whether to respond with a yes or a no.

So, it is important to take many more elements into consideration, with Tom adding that “most contact centres have a scorecard of 30-50 questions/criteria, but it varies across industries.”

Create a Measurable Quality Score Metric

Once a set of criteria has been created, it is time to decide how each element is weighted, in the interest of tallying a metric score that can be measured and tracked.

Ask Analysts to Remove Elements of the Scorecard That Weren’t Relevant

Firstly, the contact centre should think about how analysts score criteria that might not apply to every call. Should they give the advisor credit anyway or simply remove that element from the scoring?

As Tom Vander Well says: “One of the most common problems that I find is that people will stock their scorecard with behavioural elements, even though many of them won’t apply to certain conversations. So, what happens is that analysts basically give credit to the advisor for all the elements of the call that weren’t there.”

However, Tom argues that elements that do not apply to the call should just be removed from consideration, as giving credit for them creates a false overview of an advisor’s performance.

To highlight this Tom gives the following example: “Say a contact centre has a scorecard that is made up of ten questions, all of equal weight, but only five of those questions were relevant to the call. During this call, the advisor successfully fulfils the ‘needs’ of four of those questions, so the analyst scores the advisor 90%, when in actuality 80% would have given a greater perception of reality.”

So, it could be worth adding an N/A option to scorecards, to ensure that an irrelevant element is removed when calculating a percentage for quality scores. Or try using different quality forms for different types of interactions, as well as different channel types, to sharpen the metric’s focus.
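
Tom’s ten-question example can be worked through in a few lines. The sketch below is a minimal Python illustration, assuming each criterion is recorded as `True` (met), `False` (missed) or `None` (not applicable); the function names and question labels are hypothetical.

```python
def score_crediting_na(answers):
    """The approach Tom warns against: N/A criteria are counted as met."""
    credited = sum(met is not False for met in answers.values())
    return 100 * credited / len(answers)


def score_excluding_na(answers):
    """Percentage of criteria met, counting only those that applied to the call."""
    applicable = [met for met in answers.values() if met is not None]
    if not applicable:
        raise ValueError("no applicable criteria on this call")
    return 100 * sum(applicable) / len(applicable)


# Ten equally weighted questions: five did not apply, four were met, one was missed.
call = {f"q{i}": None for i in range(1, 6)}
call.update({"q6": True, "q7": True, "q8": True, "q9": True, "q10": False})

print(score_crediting_na(call))  # 90.0
print(score_excluding_na(call))  # 80.0
```

The same call produces 90% when irrelevant questions are credited and 80% when they are excluded, which is the gap described in the example above.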

This tip for changing quality forms was suggested by Justin Robbins, in our article: 30 Tips to Improve Your Call Quality Monitoring

Weighting the Scorecard

A bugbear of Tom’s, when it comes to quality, is that some companies weight everything on a scorecard equally. For instance, if a contact centre that does this has ten things on its scorecard, then each would account for 10% of the overall service score.

However, as Tom says, “Whether you identified yourself in the greeting is less important to the customer than whether you ultimately resolve their issue.”

“So, this is where you need to revert to the satisfaction research highlighted earlier and give the matters which mean the most to customers greater weighting.”
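
To see what unequal weighting does to a score, here is a minimal Python sketch. The criteria and the weights are invented for illustration only; in practice they would come from the satisfaction research described earlier. Criteria marked `None` are treated as not applicable and left out of the calculation.

```python
def weighted_score(answers, weights):
    """answers: criterion -> True / False / None (N/A).
    weights: criterion -> relative importance (any positive numbers).
    """
    applicable = {c: met for c, met in answers.items() if met is not None}
    total = sum(weights[c] for c in applicable)
    if total == 0:
        raise ValueError("no weighted criteria applied to this call")
    earned = sum(weights[c] for c, met in applicable.items() if met)
    return 100 * earned / total


# Hypothetical weights: resolution matters far more than the greeting.
weights = {
    "identified self": 1,
    "used courteous tone": 2,
    "resolved issue": 5,
    "confirmed next steps": 2,
}

missed_greeting = {
    "identified self": False,
    "used courteous tone": True,
    "resolved issue": True,
    "confirmed next steps": True,
}
missed_resolution = {
    "identified self": True,
    "used courteous tone": True,
    "resolved issue": False,
    "confirmed next steps": True,
}

print(weighted_score(missed_greeting, weights))    # 90.0
print(weighted_score(missed_resolution, weights))  # 50.0
```

With equal weights both calls would score 75%. With these assumed weights, failing to resolve the issue costs the advisor far more than forgetting to identify themselves.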

Also, it is important to consider again whether it would be more beneficial to the organisation to have the scorecard more customer-orientated than business-orientated. If the organisation feels this way but has more business elements on the scorecard, it should give the customer elements greater weighting to reflect this emphasis.

However, it is unlikely that the contact centre will get this balance right at the first time of asking. So, testing the scorecard is important. This can be done in the two ways shown below.

Testing the Scorecard

There are two commonly used methods of assessing the effectiveness of a contact centre’s quality scorecard, each of which is highlighted below.

Regression Analysis

“Regression Analysis” is a research/survey technique for testing and determining which dimensions of service have a greater/lesser impact on overall CSat.

This is according to Tom Vander Well, who says regression analysis works by “asking customers, in a post-call survey, of their satisfaction on a number of different service dimensions.”

“Then, the next stage would be asking the customer of their overall CSat with the company/experience, which enables you to derive the relative importance of the various dimensions of service in driving the customer’s overall satisfaction. The results can then be used to weight QA elements appropriately.”

“For example, if ‘resolution’ is of greater importance in driving CSat than ‘courtesy and friendliness’, you can weight the ‘resolution’-related elements of the scorecard more heavily in the calculation of the advisor’s performance than the soft skill or ‘courtesy’-related elements.”
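
As a rough illustration of the idea, the Python sketch below fits an ordinary least squares regression of overall CSat on two service dimensions and turns the coefficients into relative weights. Everything in it is an assumption made for the example: the survey responses are invented, both dimensions are rated on the same 1 to 5 scale (so the raw coefficients can be compared), and negative coefficients are treated as zero weight. A real analysis would normally use a statistics package, far more responses and significance checks.

```python
def solve_linear_system(a, b):
    """Solve a.x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("dimensions are collinear; effects cannot be separated")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back-substitution
        known = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - known) / m[r][r]
    return x


def derive_weights(survey_rows, dimensions, overall_key="overall"):
    """Regress overall CSat on each dimension and normalise the coefficients."""
    # Design matrix with a leading 1.0 for the intercept.
    x = [[1.0] + [row[d] for d in dimensions] for row in survey_rows]
    y = [row[overall_key] for row in survey_rows]
    k = len(dimensions) + 1
    # Normal equations: (X^T X) beta = X^T y
    xtx = [[sum(r[i] * r[j] for r in x) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(x, y)) for i in range(k)]
    coefficients = solve_linear_system(xtx, xty)[1:]  # drop the intercept
    # Assumption: a negative coefficient gets no weight on the scorecard.
    positive = [max(c, 0.0) for c in coefficients]
    total = sum(positive)
    if total == 0:
        raise ValueError("no dimension is positively related to overall CSat")
    return {d: p / total for d, p in zip(dimensions, positive)}


# Invented post-call survey responses (1 = very dissatisfied, 5 = very satisfied).
survey = [
    {"resolution": 5, "courtesy": 3, "overall": 4.6},
    {"resolution": 2, "courtesy": 4, "overall": 3.0},
    {"resolution": 4, "courtesy": 1, "overall": 3.6},
    {"resolution": 3, "courtesy": 5, "overall": 3.8},
    {"resolution": 1, "courtesy": 2, "overall": 2.0},
]

derived = derive_weights(survey, ["resolution", "courtesy"])
print(derived)
```

On this invented data, resolution ends up with roughly three times the weight of courtesy, which could then be used to weight the matching scorecard elements.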

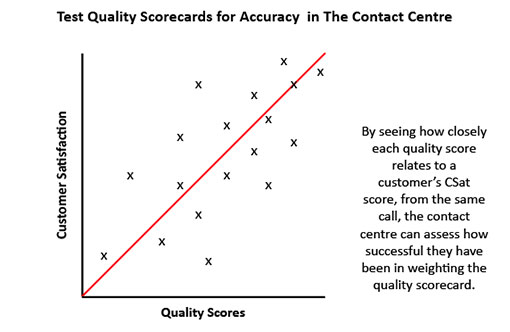

After doing this, the relationship between quality and CSat should improve. If you compare the quality score and the CSat score from the same call, across multiple interactions, the correlation between the two metrics will likely be stronger than before. Take the graph above as an example.
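
The comparison between quality scores and CSat scores can be sketched as a simple Pearson correlation. The Python example below uses invented figures: five calls, each with a CSat rating, a quality score from an equally weighted scorecard and a quality score from a re-weighted one. The variable names and data are hypothetical.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of the same length (at least 2 values)")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when one series never varies")
    return covariance / (spread_x * spread_y)


# Invented data for the same five calls.
csat = [2, 3, 5, 4, 1]                     # customer's post-call rating
equal_weight_scores = [90, 85, 88, 92, 86]  # quality score before re-weighting
reweighted_scores = [60, 70, 95, 85, 50]    # quality score after re-weighting

before = pearson(equal_weight_scores, csat)
after = pearson(reweighted_scores, csat)
print(f"before: {before:.2f}, after: {after:.2f}")
```

A value closer to 1 after re-weighting suggests that the quality score is tracking what customers actually report, although a handful of calls is far too few to draw a firm conclusion.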

For more on measuring CSat, read our article: How to Calculate Customer Satisfaction

Team Scoring

This method is a little more anecdotal, but if you get a team of people together, including quality supervisors, and score calls together, you can gather great insight.

This is according to Tom, who gives the example: “If you score a call as 90 out of a 100%, which on our scorecard we would consider to be good but not great, does 90 really reflect what happened in the phone call?”

“If it was a lot worse than that and everybody agrees that it should really have been a score of about 80%, well then, why wasn’t it? What’s the problem with this scorecard? Maybe we’re giving too much credit to an advisor in one area or something needs to be weighted more heavily, so that it has a greater impact if it is missed.”
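
If the team records both the scorecard result and the score the group felt the call deserved, the calls worth discussing can be pulled out automatically. This is a small Python sketch under assumed names: the field names, the sample calls and the five-point tolerance are all hypothetical.

```python
def flag_score_gaps(calls, tolerance=5):
    """Return (call_id, gap) for calls where the scorecard result differs from
    the team's agreed view by more than `tolerance` percentage points.
    A positive gap means the scorecard was more generous than the team.
    """
    return [
        (call["call_id"], call["scorecard"] - call["team_view"])
        for call in calls
        if abs(call["scorecard"] - call["team_view"]) > tolerance
    ]


# Invented team-scoring session results.
session = [
    {"call_id": "A", "scorecard": 90, "team_view": 80},
    {"call_id": "B", "scorecard": 85, "team_view": 83},
]

print(flag_score_gaps(session))  # [('A', 10)]
```

Calls that are flagged repeatedly with a positive gap point to elements that may be giving advisors too much credit or that need heavier weighting.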

Tip – Train Analysts Too!

Quality monitoring can be a great tool for coaching advisors, but Tom argues that analysts should also be trained, so they can bring benefits to the contact centre during these quality sessions.

Tom says something that most organisations miss out on is “training their analysts to listen for more than just what the advisor said or didn’t say, because every contact is an opportunity to ask yourself the question ‘what didn’t work for the customer in this experience?’.”

“Oftentimes, advisors are marked down for things that are outside of their control, such as struggling to find information and placing the caller on hold three times. But was this because the advisor didn’t know what they were talking about or was it that management had not provided the advisor with the information the customer was asking for.”

“So, too often quality is focused on ‘you bad advisor, you sounded like you didn’t know what you were talking about and you placed the caller on hold three times and provided a bad customer experience’. But the reality is that it’s management’s fault for not anticipating the customer might ask that question and not providing that advisor with relevant information that’s easily accessible.”

Find more of our advice for putting together a great quality programme in our articles:

- Call Centre Quality Assurance: How to Create an Excellent QA Programme

- 11 Tips and Tools to Improve Call Centre Quality Assurance (QA)

- How to Create a Contact Centre Quality Scorecard – With a Template Example

Author: Robyn Coppell

Reviewed by: Megan Jones

Published On: 4th Apr 2018 - Last modified: 23rd Jul 2024
