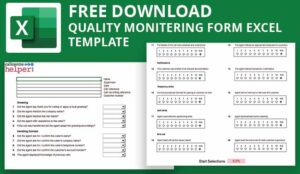

Call Centre Helper has produced a free, downloadable Excel quality monitoring form that can be used as a call quality monitoring scorecard or as a call audit template.

We’ve been busy working on our call quality monitoring form to make it easy to adapt and able to include both yes/no-type questions and rating questions. It is a free Excel-format call scoring matrix that you can use to score calls and ensure compliance.

Because the form is editable, you can customise it. Example uses include an agent evaluation form, an agent coaching form or a call quality checklist.

Using this call centre quality scorecard template, you can carry out silent monitoring of your agents for agent evaluation and active coaching. This is further explained in this article on Call Quality Monitoring.

This is a simple Excel spreadsheet (.xls), containing no macros, and there’s no password needed if you want to change and adapt the form for yourself.

Download the call quality monitoring form

Where Did the Quality Monitoring Form Originate?

The inspiration for the sample call monitoring form came from Jonathan Evans of TNT.

Jonathan Evans

Jonathan was involved with a global project to drive up the quality of calls so that they could deliver consistently high customer service over the phone.

One of the first steps of this project was to record all incoming calls into the business and to monitor five calls per agent per month.

We would like to thank Jonathan for providing us with the original copy of the call scoring matrix. We have added functionality to make it much more flexible.

You Will Find 3 Worksheets Inside this Spreadsheet

1. Call Monitoring Form

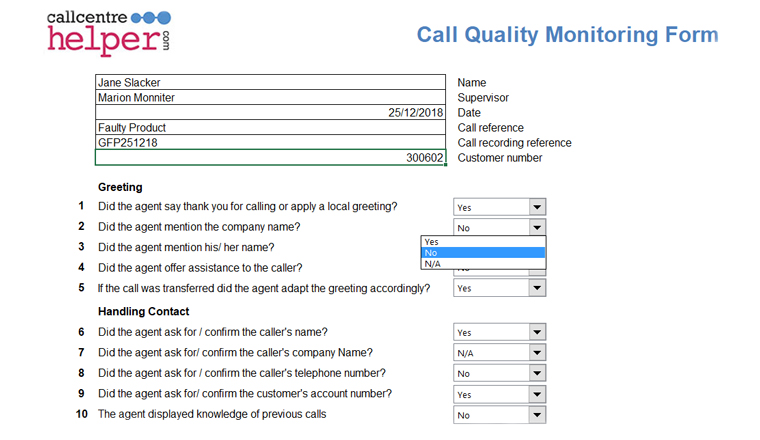

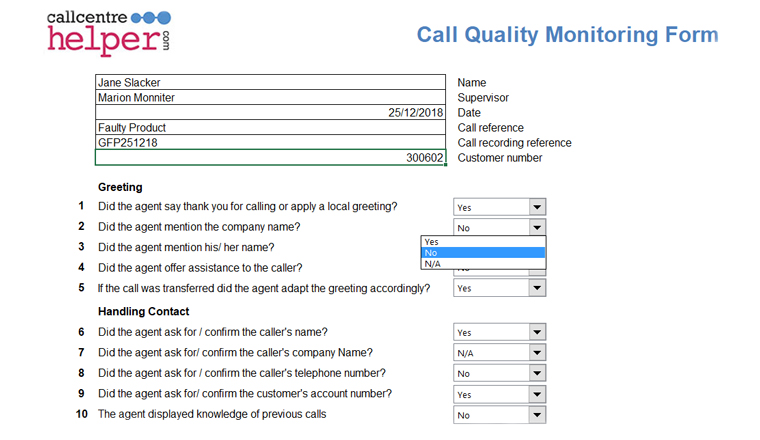

The main “Form” worksheet is where the questions are answered. It has space for agent details, 10 yes/no-type questions and 10 scoring questions.

All the questions and options used by the form are contained in the worksheet “Options”. Here you can choose the exact wording to appear in each dropdown, e.g. Yes/No could just as easily be True/False. You can set your own questions to be answered by overwriting the example questions and they will automatically pull through on to your form.

This is a filled-in example of the call quality monitoring form.

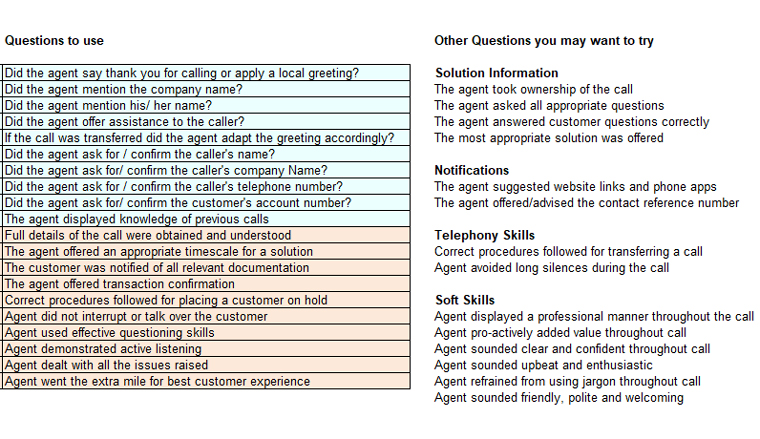

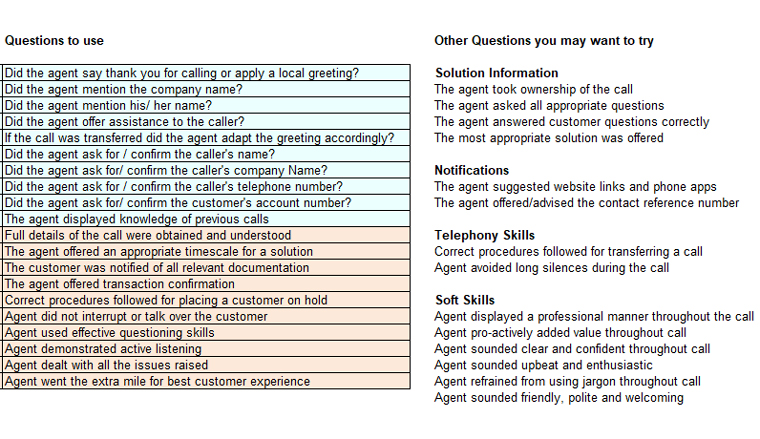

2. Example Quality Questions to Ask

In addition to the examples installed on the form, we have included a list of other questions that we know are popular and that you may want to use. As the questions on the form are answered, scores accumulate to show a final percentage score and a message. You can easily change the thresholds and the message to be displayed.
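The threshold-and-message step can be pictured with a short Python sketch. It is only an illustration of the idea, not the spreadsheet's own formula: the threshold values, the message wording and the names `THRESHOLDS` and `message_for` are all hypothetical.

```python
# Hypothetical thresholds and messages. The real values live in the
# spreadsheet and are meant to be edited to suit your own centre.
THRESHOLDS = [
    (90, "Excellent"),
    (70, "Good"),
    (50, "Needs improvement"),
    (0, "Unacceptable"),
]


def message_for(percentage, thresholds=THRESHOLDS):
    """Return the message for the highest threshold the score reaches."""
    # Check the highest threshold first so the best matching band wins.
    for minimum, message in sorted(thresholds, reverse=True):
        if percentage >= minimum:
            return message
    raise ValueError("percentage is below every threshold")


print(message_for(58))
```

Keeping the thresholds in one table, separate from the lookup logic, mirrors the way the form keeps its editable wording apart from the calculations.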

The second page of the Excel document gives example questions that can be used on the call monitoring form.

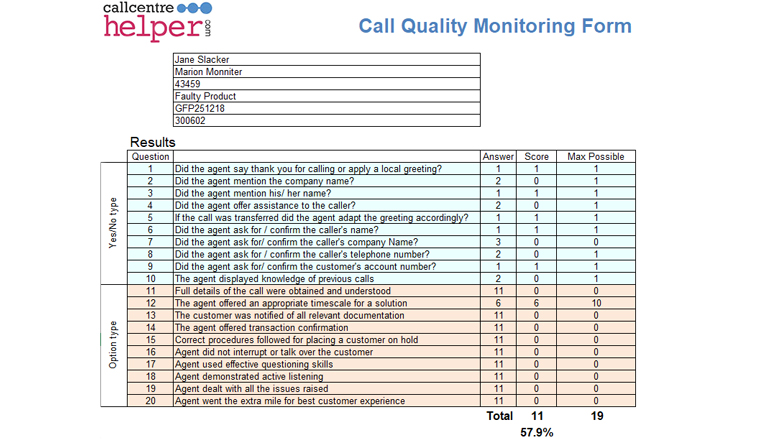

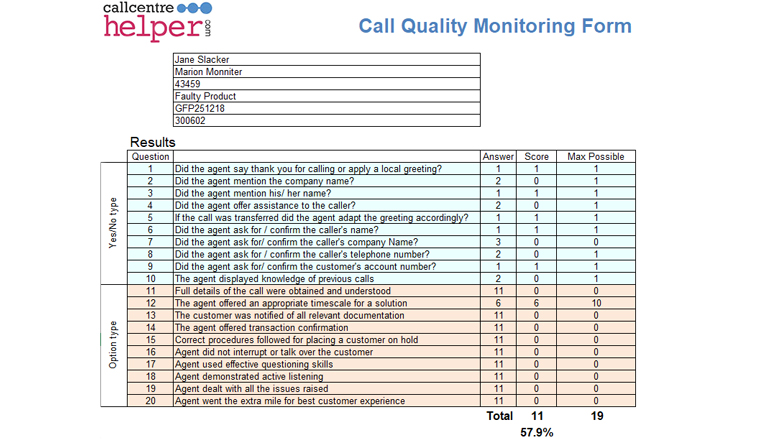

3. Call Scoring Worksheet

If a user determines a question to be ‘not applicable’, the maximum score for that question is set to 0, so that not answering it doesn’t affect the final rating. Otherwise, Yes/No-type questions score 1 for Yes and 0 for No, out of a maximum of 1. Rating questions score from 1 to 10, with a maximum score of 10.

The results page of the call quality form, showing the scores achieved for each question. A percentage score is given at the bottom of the form.

For convenience, the scores are totalled separately on the “Scoring” worksheet. You can either leave this alone, and have a clear view of the current scores, or you can adapt it if you wish so that different scoring rules are applied.
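As a rough illustration of these scoring rules, here is a minimal Python sketch. It assumes the final percentage is the sum of the scores divided by the sum of the maximum scores, which the description implies but does not spell out. The function names and the use of `None` for ‘not applicable’ are choices made for this example, not part of the spreadsheet.

```python
from typing import Iterable, Optional, Tuple, Union

# None stands for a question marked 'not applicable' (an assumption of
# this sketch; the spreadsheet uses a dropdown option instead).
Answer = Union[bool, int, None]


def question_score(kind: str, answer: Answer) -> Tuple[int, int]:
    """Return (score, maximum score) for a single question."""
    if answer is None:
        # Not applicable: the maximum drops to 0, so the question
        # has no effect on the final percentage.
        return 0, 0
    if kind == "yes_no":
        return (1 if answer else 0), 1
    if kind == "rating":
        if not 1 <= answer <= 10:
            raise ValueError("rating must be between 1 and 10")
        return int(answer), 10
    raise ValueError(f"unknown question kind: {kind!r}")


def percentage_score(answers: Iterable[Tuple[str, Answer]]) -> Optional[float]:
    """Total the scores and express them as a percentage of the maximum."""
    total = 0
    maximum = 0
    for kind, answer in answers:
        score, max_score = question_score(kind, answer)
        total += score
        maximum += max_score
    if maximum == 0:
        return None  # every question was 'not applicable'
    return 100 * total / maximum


example = [
    ("yes_no", True),   # scores 1 out of 1
    ("yes_no", False),  # scores 0 out of 1
    ("yes_no", None),   # not applicable: 0 out of 0
    ("rating", 8),      # scores 8 out of 10
    ("rating", None),   # not applicable: 0 out of 0
]
print(percentage_score(example))  # 9 out of 12 -> 75.0
```

Note that under this assumed formula a single rating question carries ten times the weight of a yes/no question, which is worth bearing in mind if you adapt the scoring rules.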

Download the call quality monitoring form

We hope you find this spreadsheet useful and a good start for your own forms. If you do adapt it, please consider sending us a copy so that we can share changes and improvements with the Call Centre Helper Community.

Leave any questions or comments below. We’ll answer them for everyone and make any updates as needed.

Once you have started to use the call quality monitoring, evaluation and coaching form, take a look at this article with 30 Tips to Improve Your Call Quality Monitoring, for useful advice on how to improve quality monitoring in your call centre.

How to Use the Call Monitoring Form

The call monitoring form is based on Microsoft Excel. It is not password protected, so you are free to change it to fit your specific internal requirements. Most of the questions are generic and could apply to most contact centres. You would need to change the “Transaction information” section to fit the specific needs of your business.

To vote, simply add an “x” in the appropriate column. The number of votes in each column is added up at the bottom. The percentage score is the quality score; it represents the total number of Yes votes compared to the total number of No votes.

It has been designed so that if a section is not applicable to a call (for example in the case of a transfer) then this does not have a negative impact on the quality score.
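A minimal Python sketch of this vote-counting approach is shown below. It assumes the percentage is calculated as Yes votes divided by the total of Yes and No votes, with not-applicable rows left out; the article does not state the exact formula, and the function name and the `"yes"`/`"no"`/`"na"` labels are hypothetical.

```python
def quality_score(votes):
    """Percentage of Yes votes among the questions that applied.

    `votes` holds one entry per question: "yes", "no" or "na",
    depending on which column received the "x".
    """
    yes = sum(1 for vote in votes if vote == "yes")
    no = sum(1 for vote in votes if vote == "no")
    if yes + no == 0:
        return None  # nothing applicable was scored
    # "na" rows are simply ignored, so they cannot lower the score.
    return 100 * yes / (yes + no)


# A transferred call where two questions did not apply.
print(quality_score(["yes", "yes", "no", "na", "na"]))
```

In this example, two Yes votes and one No vote give a score of about 67%, whether or not the two not-applicable rows are present.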

If you use this call quality monitoring form to audit your staff for training or to evaluate performance, please look at our article on Call Quality Evaluation for more information on call quality analysis.

Terms and Conditions

Use of the Monitoring Form is subject to our standard terms and conditions.

Download the Quality Monitoring Form

Click here to download the Call Monitoring Form.

We hope you get some benefit from this tool. If you adapt it, for example to add in weightings, please email us a copy so that we can share it with other members of the community.

Author: Jonty Pearce

Reviewed by: Jo Robinson

32 Comments

-

This opens an interesting debate. I think forms that result in percentage scores like this are among the reasons that front line agents are suspicious of coaching and development. The bottom line is, what are you going to do with the “58%”? What does it mean? Once you establish that you are looking for trends that can help with development and improve quality, these percentages are meaningless and negative. I would advise dropping the “score” part of the form entirely and using a “Commitment Box” instead. Give the agent the opportunity to commit to changing one behaviour, rather than punishing them with a mediocre score like “58%”.

Michael Muldoon

4 Jun at 12:29

-

I would argue that a better solution would be to use fully automated customer surveys where callers score, rather than internal staff. The scores will be from the most important audience, save a lot of time and be more accurate. I would say this as we run such services (www.virtuatel.com) but time and again such services are proven to provide fast, accurate and valuable data, with recorded customer verbatim comments which are excellent for agent training.

If you are interested enough to download this form, you should look at automated surveys!

Alan Weaser

6 Jun at 10:43

-

Unfortunately, customers do not always “KNOW” if they got ‘good’ service when answering a post-call survey. Depends upon technical nature of call, though. On our helpdesk, analysts can tell a pretty tall tale that has nothing to do with the issue at hand or fixing the problem. They’re stalling while they try to figure it out. They sound so knowledgeable too… unless you know the truth is they don’t have a clue what they’re talking about. I, for one, don’t need my analysts blowing smoke up the customers’ skirts. I need them to focus on using the knowledgebase to fix the issue rather than finding band-aids and hoping the customer gets someone else next time they call back.

Melissa

14 Jan at 04:06

-

I tend to disagree with some of the above statements – specifically that scores aren’t important. They help benchmark agents and set goals. They are a good gauge of where an agent is at in relation to that goal. If all agents are scored on precisely the same criteria, the ones who are doing a good job will meet or exceed the goal, while the ones who are missing some things will fall beneath it. Further, customer surveys add value, but only when used along with internal scoring. For example, it’s not an accurate reflection of service if the agent was polite and knowledgeable, and gave the right information, but the caller was dissatisfied because they didn’t get the answer they wanted, and subsequently provides negative feedback. At the same time, you could have an agent who truly doesn’t provide good information or fix the problem, but the caller may have sensed otherwise and gives positive feedback about the interaction. Without both being looked at, you are only getting one side of the picture.

J Wayne

31 Mar at 22:11

-

Very very useful form, thanks a lot!

Karine

26 May at 06:53

-

I agree with J Wayne in that internal scoring is still important for staff development and should be used alongside external feedback from customers.

First and foremost the customer’s ‘perception’ of the service is the true scoring factor for the business, so improving external feedback should be the overall target or goal, however I believe that using the areas highlighted by such feedback to customise your internal staff development is also vitally important.

To explain, if your customers are frequently stating that the greeting they get is unprofessional or rushed, then having a tick box score for staff saying, “Did the staff member say hello?” is not going to improve that aspect of the service. Instead the internal scoring should be tailored to mirror the requirements of YOUR customers. Perhaps instead of the above the internal scoring could state, “Did the caller speak clearly, at a sensible speed and provide all greeting information politely, adapting as appropriate?”…this then focuses on training staff to meet the specific needs of YOUR customers and not a generic customer or call.

My main constructive criticism of this form is that it weights all the areas equally when your tenants may care more about one part of the service over another. To explain – a lot of industry surveys confirm that callers care more about HOW they are spoken to than WHAT they are told. If this is the case for YOUR customers, then the internal scoring should be weighted towards HOW the call was answered, and less about WHAT was said. I would strongly recommend adapting the downloadable form to allow drop-down selections for the YES column to be scored out of 10 and for it to be broken down into 2 sections, knowledge skills and vocal skills. This way there is the possibility to weight the two elements based on feedback from YOUR customers and also provide more detailed areas for improvement for your staff (i.e. if they scored YES you may skip over training, but if they scored 3 in the yes column, they could still be targeted and coached to improve this element of the service they provide).

Sorry for the longwinded comment – I should write an article or blog really!! lol

Matthew Hedges

26 May at 11:23

-

I currently use this form, as I believe that it gives you an idea as to what level the agent you are monitoring is at. For instance, I currently lead a team of telesales agents, all at different levels of ability, who therefore require a different approach when it comes to providing them with the best possible training. This form allows you to highlight the areas of concern but also leaves you open to congratulate the agent on the areas in which they have improved or are consistent.

It isn’t perfect in terms of the layout, but that’s not anything that can’t be delegated to sort out.

James Roscoe

4 Jun at 11:17

-

I think this form is excellent. It gives you a template that is perfectly open to being tailor made to suit your business needs.

Not every question on the form is relevant, so instead, I replaced them with questions that are.

I think the percentage score is ideal for quickly establishing a bench mark for the member of staff to work with. You can easily identify what needs improving and the whole process of improvement is a lot quicker as they can home in on exactly what specific areas need more focus.

T Davids

23 Jul at 11:43

-

Great info and good comments! Do you have a sample for outbound sales? Specifically credit cards?

Gerry

23 Sep at 01:18

-

All the above comments seem true, but how do you judge calls that are linked to the employer’s bonus? Is it fair to leave that in the hands of the customers? Or the internal call markers? Discuss.

will hudson

27 Sep at 16:17

-

There is always an interesting debate which occurs when scores are attributed to anything we do, particularly when scores are linked to potential bonuses.

In my experience what is done with the information is the most important factor. Firstly, I have always approached the concept of scoring calls from a perspective of “everything is right, until something is done wrong”. Looking at the scorecard we have here, I would make one small modification, that is to add the N/A value into the numerator and denominator: (Yes+NA) / (Yes+NA+No). The result becomes 67%.

Essentially each call, from a quality standpoint, needs to have a standard benchmark regardless of call type. An agent cannot control the N/A portion of their interaction, so why remove these from the formula? If quality is the reason behind the scorecard, then the quality expected should be consistent regardless.

The positives from the call are used to reinforce and recognise displayed behaviour and the negatives, or no’s, are used as coaching opportunities. I have found in the past that is the fairest approach.

Also, using a large sample size within your own team can give a clearer ability to select the expected result. (90% should not be selected because it sounds great.) It should be more around the team’s ability to achieve. Once the team starts to achieve their ‘target’, the target can be gradually lifted to improve performance for all.

Benjamin

1 Oct at 00:33

-

Kindly help me in getting call evaluating sheet. If possible kindly send it to my e mail address.

Vijay

12 Dec at 15:05

-

Wow, something free, what a wonderful idea. I have a very young call center. Having managed a 500 seat established call center in the past, I can see both sides of the argument. From my perspective now, this is a terrific start. When you have young inexperienced reps, having something simple and very direct is of more value than anything much more complex. So, thank you. As for the feedback from the customer, keep in mind that however you do that, your responses are going to be tainted. Customers that are upset for ANY reason, including none that involve the rep, are much more likely to take the time to let their feelings be known by participating in this type of survey. It’s a good baseline, but not a good way to hold someone ultimately accountable, unless there are multiple disturbing results. Just my opinion.

Mike Gilpatrick

12 Jan at 00:15

-

Really appreciate the form and all your comments. We are thinking of a call monitoring program, so it is really great to see your ideas above. Thank you.

Barbara Wong

20 Jan at 05:40

-

I would love to see additional examples of monitor forms used within companies today. If you could send me any forms you run across and let me know the company they came from, that would be great!

Katt

18 Apr at 18:28

-

Thank you for the form, it was very useful. This type of free information exchange is really very helpful.

Rahul

27 Jul at 07:57

-

This is amazing thank you so much for the monitoring sheet. It is awesome 🙂

Sowmya

30 Sep at 21:29

-

Can someone please forward me an evaluation sheet for outbound?

Shirin

31 Mar at 18:44

-

Hi, I was also trying to update an old form. Your example and comments are most helpful, as I am a very young manager on a learning curve (Poland).

I will read more on your website. Thank you for everything:)

Helena

17 May at 13:12

-

Scores are a useful benchmark and trends are important to look at on a criteria level as well to drive training and coaching objectives. The important thing to do is to ensure you have a sufficient sample size for an agent and a centre so that your scores (averages) are meaningful. If you assess 5 calls/month and an agent is handling 500 calls/month then you’re assessing only 1% of calls and your confidence interval is +/- 45%. You can’t really rely on data that varied for trends. Good for individual coaching perhaps.

Alex

23 May at 08:18

-

Thank you very much for the useful tool!

fbaker

2 Jan at 21:02

-

Love the form! I’m a CSR manager looking for an easy template to build off of, yours worked perfectly… Thanks for sharing…

David

24 Jan at 22:58

-

I would like to know if there are any auditing forms for the ticket lifecycle, such as checking the status of the ticket, work info, SLA, etc.

Anonymous

24 Apr at 08:19

-

Has anyone else noticed that the tally of the calls isn’t very accurate? It counts the yes answers and assumes they’re all positive. Yet answering “yes” to “did the agent talk over the customer” would be a negative, wouldn’t you think? Be sure to word all your questions to have the “yes” answer be the positive answer or you’re throwing off the score.

Drb

24 Apr at 19:51

-

Does anyone have a policy that goes with the form?

jolene hoffman

21 Jun at 13:28

-

I was wondering, does anyone have an evaluation form for chats and e-mails that they would be able to share with me?

Raj

25 Jul at 13:14

-

Hi

Thank you very much for your good work. I have a question. I have given this form to four separate people to evaluate the agents here. Three points on the scale are clear for scoring (10 = Absolutely Outstanding, 5 = Satisfactory but room for improvement, 1 = Totally unacceptable), but the points in between are not clear. What common criteria should be used for scoring? Thanks.

farshid

15 Oct at 12:23

-

Hi, I have close to 20 agents. I need to monitor all their calls and score them. Kindly help me with a report to score all the agents on all the parameters.

mythili

8 Aug at 19:32

-

Hi

Thanks for the form. Are there any criteria available for the behaviours that equate to each of the scores, i.e. what behaviour would you expect to see for the CSR to score a 10 under the category “The agent took ownership of the call”, what equals 5, etc.?

Would like to ensure this is not too subjective – Cheers Kath & Donna

kath.

21 Jul at 03:36

-

This is a very helpful tool for call center quality call monitoring. Great job.

Anna

5 May at 07:41

-

Hi There! When creating a quality form, how do you distribute the points per parameter?

Arvin

20 May at 00:11

-

There is no right and wrong answer for this. Sometimes it can be a pass/ fail, sometimes it is on the basis of points.

Jonty Pearce

25 May at 15:14
