Calibrating quality scores improves the contact centre environment by reducing bias and enhancing fairness, creating a more consistent customer experience across the board.

Here’s a straightforward guide to help you establish a clear, effective process for calibrating quality scores in your contact centre.

Why Calibrate Quality Scores?

Quality scores are often seen only as a way of measuring advisor performance, but they are also a tool for ensuring fairness and consistency by removing any perception of bias or unequal treatment.

By following a standardized approach, you prevent subjective judgments from affecting results, which keeps evaluations fair for all advisors.

For example, by refining quality monitoring, you can head off complaints that the process is unfair, such as “well … also forgot to repeat the customer’s name and they didn’t get marked down.”

Calibration also creates consistent service across your organization and validates your contact centre’s standards and procedures.

A 7-Step Process for Calibrating Quality Scores

This step-by-step process covers everything from assigning roles to holding calibration sessions.

It’s designed to create a reliable, consistent approach to quality that everyone in your team can understand and follow.

Step 1 – Assign a Quality Process Leader

To ensure successful calibration, appoint an overall leader responsible for the process. This person will make the final decisions about which elements of the call are assessed, which helps avoid ongoing debates and ensures consistency.

A single leader prevents disagreements from stalling progress and keeps the calibration process aligned with company standards and goals.

As Tom Vander Well explains, “I think that one mistake in calibration is that there is no clear authority to make the decision.

Say there are two different views on the same situation, how should we assess one particular behaviour in certain situations? Is this the decision that we’re making because it fits our brand? Or is it part of our strategic plan?

So, there might need to be someone holding people accountable to that standard. I feel often that calibration, it just becomes an ongoing debate with no resolution.”

Step 2 – Define Quality Standards

Determine the standards you want your team to meet. You could aim for a high bar to encourage advisors to improve, or keep it simpler to help boost morale and show advisors they’re doing well.

For instance, setting a simpler standard – where advisors achieve 100%, 90% of the time – provides advisors with evidence that they are doing a good job and may boost morale.

Decide on what “quality” means for your centre—whether it’s about quick resolutions, soft skills, or reduced customer effort—so your team understands the targets.

Step 3 – Identify Key Behaviours to Target

Once you’ve set call quality standards, identify the specific behaviours you want to assess. Common targets include First Contact Resolution (FCR), courtesy and friendliness, and efficiency.

These behaviours typically improve the customer experience and align with the goals of most contact centres, though centres in certain sectors, such as the financial sector, must also target verification and compliance.

Step 4 – Define a Purpose for Quality Targets

Discuss with your team what each targeted behaviour means for customers, colleagues, and the contact centre as a whole.

This ensures everyone understands why these behaviours are important and clarifies what each one looks like in action.

A shared understanding of the purpose behind each target makes it easier for everyone to assess quality in the same way.

For example, if everybody involved in the process can agree on why FCR is important, and why they are targeting advisors on it, it is easier to come to an agreement as to what is considered FCR and the behaviours to look out for.

For the identified behaviours, Ian Robertson suggests asking:

- What do the behaviours mean for our customers?

- What do the behaviours mean for our colleagues?

- What do the behaviours mean for our company/organisation?

By doing so, Ian believes “you can be objective and focused on the outcome, which should make it much easier to reach agreement.”

Step 5 – Create a Quality Scorecard

Develop a scorecard that outlines the specific elements of each target behaviour. For example, if you’re assessing hold etiquette, you might score advisors on whether they ask permission to put a caller on hold and thank them for holding.

Everybody involved in the process should discuss the elements of each behaviour and together create a unique scorecard that, in the leader’s judgement, aligns with business goals.

Defining each behaviour with concrete criteria keeps assessments consistent and gives advisors clear, measurable goals to aim for.

For example, to target the soft skill of “hold etiquette”, Tom Vander Well suggests the criteria could be:

- Does not mute or leave caller in silence instead of placing caller on hold

- Seeks caller’s permission to place him/her on hold

- Thanks caller for holding when returning to the line

- Apologises for wait on hold if length exceeds 30 seconds

For the vaguer topic of call resolution, by contrast, scorecard elements could include:

- Makes complete effort to provide resolution for caller’s question(s)

- Offers call-back if wait time will/does reach 3 minutes

- Provides time frame for call-back or follow-up correspondence

- Confirms phone number for call-back
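One way to keep a scorecard like this in a form that can be tallied later is as a simple mapping from each behaviour to its yes/no elements. The sketch below is illustrative only: the `SCORECARD` name and the `score_call` helper are hypothetical, the element wording is copied from the two lists above, and the equal weighting of elements (with no "not applicable" option) is an assumption rather than something the scorecard prescribes.

```python
# Hypothetical layout: behaviour -> list of yes/no scorecard elements.
SCORECARD = {
    "Hold etiquette": [
        "Does not mute or leave caller in silence instead of placing caller on hold",
        "Seeks caller's permission to place him/her on hold",
        "Thanks caller for holding when returning to the line",
        "Apologises for wait on hold if length exceeds 30 seconds",
    ],
    "Call resolution": [
        "Makes complete effort to provide resolution for caller's question(s)",
        "Offers call-back if wait time will/does reach 3 minutes",
        "Provides time frame for call-back or follow-up correspondence",
        "Confirms phone number for call-back",
    ],
}


def score_call(ticked):
    """Return, per behaviour, the percentage of elements ticked for one call.

    `ticked` is the set of element descriptions the analyst ticked.
    Assumption: every element carries equal weight.
    """
    results = {}
    for behaviour, elements in SCORECARD.items():
        hits = sum(1 for element in elements if element in ticked)
        results[behaviour] = 100 * hits / len(elements)
    return results


# Made-up call: the analyst ticked two of the four hold-etiquette elements.
example_ticks = {
    "Seeks caller's permission to place him/her on hold",
    "Thanks caller for holding when returning to the line",
}
print(score_call(example_ticks))
```

Storing the raw ticks for each element, rather than only a total, is what makes it possible to compare analysts element by element later on.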

Step 6 – Document Principles to Keep Scoring Consistent

According to Tom Vander Well, “many calibration sessions turn into a war over a small piece of one call because of this. I found myself always asking: ‘what’s the principle we can glean from this discussion that will help us be more consistent in scoring all of our calls?’”

This means that sometimes, even with a scorecard, analysts may interpret things differently. To address this, Tom suggests contact centres create a “Calibration Monitor” document.

This document summarizes agreed-upon principles to ensure everyone scores consistently.

By having these guiding principles in writing, you can separate subjective judgments from objective criteria, which helps prevent minor differences from turning into major disagreements.

Step 7 – Hold Regular Calibration Sessions

Gather your analysts periodically to score calls together and discuss their evaluations. This helps them align their scoring approaches and smooth out any inconsistencies.

Tom Vander Well explains that these sessions involve getting “all analysts in a room and taking one phone call. Each analyst scores the call and then everyone comes together and compares the results.

This brings out the differences in the scoring, as a debate will ensue about how to align these things correctly.

It is very healthy and positive, whilst allowing the leader to manage and say, ‘nope, this is the way we’re going to do it. I want everybody to look at it this way moving forward.’”

This helps to resolve any differences in interpretation and makes scoring more consistent, by ensuring that everyone follows the same standards.

It’s also useful to rotate the types of calls analysed in these sessions, such as customer service versus sales calls, to cover a range of situations and avoid confusion.

Moving Beyond Calibration Sessions to Track Consistency

After calibration sessions, track the scoring patterns of each analyst. Record their scores over time, then look for any significant deviations from the team’s average.

You need to do this because, as Tom Vander Well explains, certain individuals will do one of two things:

“One, they’ll say one thing in the calibration session because they know that’s what the leader wants to hear, but then when they go back and analyse the calls, they continue to score it the way they believe it should be done.

The second thing that I see happen is that analysts will score the call that they know is going to be calibrated one way because they know they’re going into a calibration session.

So, the analyst may think ‘I’m going to score it this way because I know that’s what’s going to be acceptable in the calibration session’. But they may continue to score actual calls, which they don’t think they’ll necessarily be held accountable for, in another way.”

So, if one analyst scores lower than others on a specific behaviour, talk to them to find out why. Sometimes, an analyst’s perspective can reveal a more accurate standard that the whole team should consider.

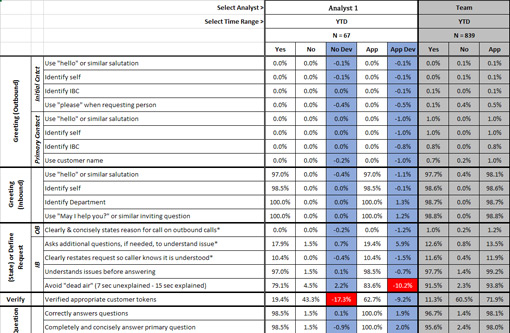

How to Compare Scores From Different Analysts to Look for Deviations

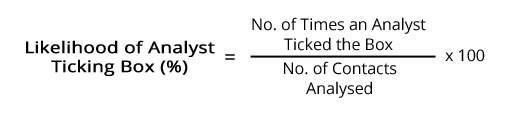

Once each analyst has scored 50 or more calls, record the scorecard for each contact analysed in a program such as Microsoft Excel, then work out the percentage likelihood of each analyst ticking a given box.

This likelihood, which is the starting point for measuring calibration variance, can be worked out with the following formula:

Likelihood of Analyst Ticking Box (%) = (Number of times the analyst ticked the box ÷ Number of contacts analysed) × 100
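As a quick worked example, the formula can be written as a small Python function. The function name `tick_likelihood` and the figures used are made up for illustration and do not come from the article's data.

```python
def tick_likelihood(times_ticked, contacts_analysed):
    """Percentage of analysed contacts on which the analyst ticked the box."""
    if contacts_analysed <= 0:
        raise ValueError("contacts_analysed must be positive")
    return times_ticked / contacts_analysed * 100


# Made-up figures: the box was ticked on 42 of 60 analysed contacts.
print(round(tick_likelihood(42, 60), 1))  # prints 70.0
```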

Then, compare each analyst’s percentage against the team’s, element by element, and look for deviations. In the example graphic, provided by c wenger group, they are looking for deviations of plus or minus 10%.

From this graphic, the analyst “Analyst 1” can be seen to be 10.2% less likely than the rest of the team to note that an advisor tried to “Avoid ‘dead air’”.

So, taking this information into consideration, the team leader can take “Analyst 1” aside and ask them to be slightly more lenient when judging whether dead-air time exceeds seven seconds (unexplained) or 15 seconds (explained).

However, be careful when doing this, as Tom warns that “sometimes the person who looks like they’re the outlier is actually the one who’s being more accurate than rest of the team. But it allows us to have the conversation with them and dig into why there is a difference.”
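The comparison described in this section can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the method behind the graphic: the `flag_deviations` helper and the sample figures are hypothetical, each analyst is compared against the average of the other analysts, and the plus or minus 10% threshold is treated as percentage points.

```python
from statistics import mean


def flag_deviations(rates, threshold=10.0):
    """Find analysts whose tick likelihood differs from the rest of the team.

    `rates` maps analyst -> {scorecard element: tick likelihood in %}.
    Returns (analyst, element, gap) tuples, where gap is the analyst's
    percentage minus the mean of the other analysts, in percentage points.
    """
    flags = []
    for analyst, own_rates in rates.items():
        for element, own_rate in own_rates.items():
            others = [
                other_rates[element]
                for name, other_rates in rates.items()
                if name != analyst and element in other_rates
            ]
            if not others:
                continue  # nobody else scored this element
            gap = own_rate - mean(others)
            if abs(gap) >= threshold:
                flags.append((analyst, element, round(gap, 1)))
    return flags


# Made-up tick likelihoods for a single scorecard element.
example_rates = {
    "Analyst 1": {"Avoid 'dead air'": 80.0},
    "Analyst 2": {"Avoid 'dead air'": 92.0},
    "Analyst 3": {"Avoid 'dead air'": 90.0},
}
print(flag_deviations(example_rates))
# prints [('Analyst 1', "Avoid 'dead air'", -11.0)]
```

A flag here is only a prompt for a conversation. As Tom notes, the apparent outlier may turn out to be the most accurate scorer on the team.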

Common Mistakes to Avoid During Call Quality Calibration

Here are five key mistakes to steer clear of when calibrating call quality scores:

1. Creating a Combined Scorecard in Calibration Sessions

Avoid scoring calls as a group in real time during calibration sessions. Instead of scoring calls separately and then coming together to compare results, some teams gather in one room, listen to a call, and go through the scorecard together item by item, discussing or voting on each one.

However, this can lead to stronger voices dominating the process, leaving quieter members hesitant to share their views. This approach can reduce the effectiveness and fairness of quality scoring.

Tom Vander Well adds that he has “found this to probably be the least effective method simply because it goes back to sort of the rules of the playground and the people who have the loudest voices and the strongest opinions dictate the conversation, whilst people who have different opinions but are afraid of speaking out keep quiet, and I think it has limited impact. Sometimes, this can even have a negative influence on quality scoring.”

2. Making Assumptions Based on Small Sample Sizes

Be cautious when interpreting data from small sample sizes. If an analyst has only reviewed a few calls, their scores might look inconsistent with the team’s.

Tom Vander Well explains that “sometimes, depending on your sample sizes, you have to be very careful with the data, because it may look like one person may be scoring completely differently from the rest of the team.

But, depending on how many calls you and the team are looking at, and the types of calls that they’re scoring or taking, it may be perfectly justified.”

For example, if an analyst has only examined ten calls in a week, do not jump to conclusions based on deviations. If they have analysed 50 or more, however, there is more than likely enough data to examine.
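A simple guard can stop small samples from being compared in the first place. The sketch below is hypothetical: the `split_by_sample_size` helper and the call counts are invented for illustration, and the cut-off of 50 is the rule of thumb given above rather than a statistical guarantee.

```python
MIN_CALLS = 50  # rule-of-thumb cut-off from the text, not a statistical guarantee


def split_by_sample_size(calls_scored, min_calls=MIN_CALLS):
    """Separate analysts with enough scored calls from those still short.

    `calls_scored` maps analyst -> number of calls scored so far.
    Returns (ready, waiting) as two alphabetically sorted lists of names.
    """
    ready = sorted(name for name, n in calls_scored.items() if n >= min_calls)
    waiting = sorted(name for name, n in calls_scored.items() if n < min_calls)
    return ready, waiting


# Made-up call counts for three analysts.
ready, waiting = split_by_sample_size(
    {"Analyst 1": 62, "Analyst 2": 10, "Analyst 3": 50}
)
print(ready)    # prints ['Analyst 1', 'Analyst 3']
print(waiting)  # prints ['Analyst 2']
```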

3. Rushing Through Call Analysis

Deadlines can make it tempting to speed through call analysis, but rushing leads to mistakes.

Take the time to listen closely and score carefully. Missing details because of a rushed review can hurt the accuracy and usefulness of your calibration process.

Tom Vander Well believes that most mistakes during the calibration process are honest ones, and from experience thinks that “one of the biggest problems I find is that there’s a deadline by which I have to have all my calls analysed, and due to human nature being human nature, I wait to the last minute.

Then, all of a sudden, I’ve got to have 40 calls analysed by the end of the day and so I go in and I basically do it as quickly as possible, and don’t take the time to really listen and analyse well and I make mistakes.”

4. Failing to Define When Certain Criteria Apply

Misunderstandings can happen if analysts don’t know when specific scoring criteria apply.

For example, an advisor may not apologize during a callback if the customer seems calm, but the delay itself might merit an apology, as Tom explains:

“Making an apology is a good example for when things haven’t met the caller’s expectations. In one instance, an advisor may say, ‘well, the customer called and left the message that they needed this’.

So, now the advisor has to call back, and after they’ve done so, they may say, ‘well, the customer didn’t seem upset at all and they weren’t yelling or screaming at me, so I didn’t see the need to apologise.’

Yet, we know from research that resolving issues quickly is a key driver of satisfaction and the fact that the contact centre was not there to answer the phone in the first place, and they had to wait for a call-back, seems enough of a reason to apologise.”

To ensure consistency, include guidelines specifying when each behaviour (like apologizing) is expected.

5. Excluding Advisors from Calibration Sessions

Including one or two advisors in calibration sessions helps them understand the scoring criteria, how to improve their quality scores, and what is expected of them.

You might even ask advisors to complete a self-evaluation beforehand, allowing them to reflect on their calls and discuss areas for improvement.

Author: Robyn Coppell

Published On: 7th Jun 2017 - Last modified: 8th Nov 2024
