In this article, we note some of the key things that contact centres must do when assessing quality on live chat and email.

Make Sure That Quality Scores Reflect Customer Satisfaction

According to Neil Martin, the Creative Director at The First Word, there should in theory be a close relationship between quality scores and CSat in the contact centre, because quality scores should tell the organisation exactly what its customers are saying and feeling.

However, the reality appears to be quite different.

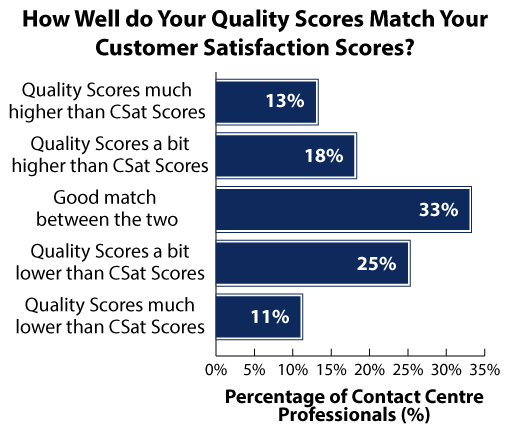

When we asked 194 contact centre professionals how well their quality scores correlated with their CSat results, only a third (33%) said that there was a “good match between the two”.

Comparing quality scores with customer satisfaction is important, and if there is little correlation between the two, it indicates the contact centre’s quality assessment isn’t working as well as it could be.

While business priorities such as compliance must be included on a quality scorecard, it is important to survey customers before creating one. This enables the contact centre to focus its scorecard on what matters most to customers.

For more on surveying customers to build a scorecard, read our article: Quality Parameters: Building the Ideal Quality Scorecard and Metric

The Wrong Way to Measure Quality

Take the following example of an email sent by a rail company, which shall remain nameless.

It was concerning to learn of the circumstances which prompted your contact to this office.

To reduce fare evasion, it is our policy that all passengers must be in possession of a valid ticket to travel before they board our trains.

Whilst it does say in the National Conditions of Carriage that it’s a customer’s responsibility to check their tickets, occasionally mistakes occur and realistically the error was caused by ourselves. A cheque to the value of £30 is enclosed to cover the cost of the ticket.

It was common practice for the rail company to assess an email like this using a traffic light system, in which:

- Green = customer feels happy and confident

- Amber = customer not satisfied

- Red = customer annoyed and at risk of contacting us again

Surprisingly, the rail company scored this email “green”, something that Neil Martin questions.

Instead, Neil thinks that this should score amber or possibly red, because: “There’s no real empathy here and the jargon makes it sound like a terms and conditions page. And, even though the customer gets £30, it looks quite a begrudging gesture, which should be said before the last sentence.”

The contrast in how Neil and the railway company scored this email signals a big gap between the way the organisation assesses its own emails and the way its customers think.

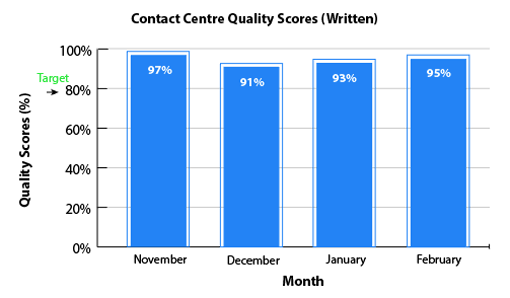

After reading this email, and others like it, Neil asked to see the railway company’s quality scores to see how they were doing. The results from November-February are highlighted below.

This chart indicates that the organisation’s advisors are scoring really well, with their 80% advisor target being exceeded by at least 10% each month.

So, you would expect that everything was going brilliantly and that emails and live chats were doing everything customers wanted.

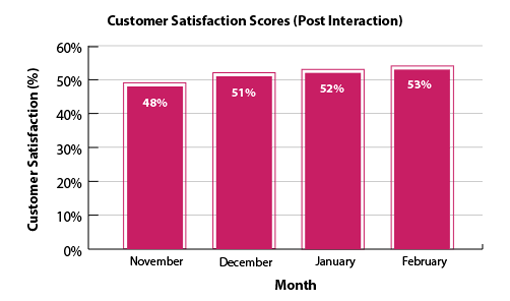

But then Neil asked to see the contact centre’s CSat scores, so he could compare them. The graph below highlights the CSat scores for the same period as in the graphic above.

So, CSat scores were about half of what the contact centre’s quality scores were. Also, during the month of November, quality scores were at their highest, when the corresponding CSat score was at its lowest.

This highlighted that the company was telling itself a different story from the one its customers were telling it.

What Are the Problems With Quality Assessment?

While quality assessment is a valuable exercise, it often goes wrong and can lead to results like those of the railway company in the example above.

When quality assessment goes wrong, it will likely be down to the scorecard being:

- Vague

- Subjective

- Generic

- Not linked to coaching

- Too long

- Confusing

- Difficult

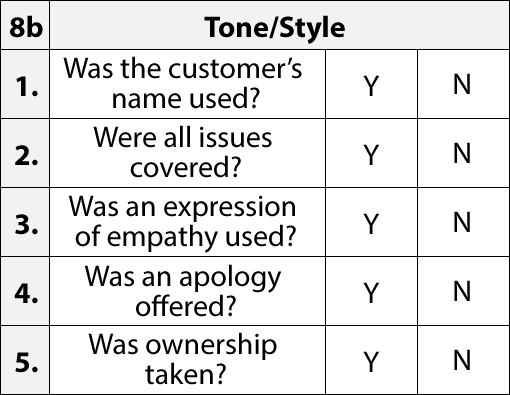

What this all comes down to is asking the right questions. For instance, the example below is a typical quality assessment framework for tone and style on email and live chat.

A typical quality framework – There are better questions to ask!

On the surface, these all sound like sensible questions, covering things that we would like to see in any standard live chat or email interaction.

But there is a problem with this set of questions. Neil Martin highlights this issue by getting contact centres to ask themselves: “could a robot pass your quality assessment?”

Neil poses this question to contact centres because “if you’ve got human beings writing to your customers, you don’t want it that a robot could actually do their job. Therefore, a robot shouldn’t be able to pass your quality assessment.”

Could a Robot Pass Your Quality Assessment?

The aim of quality assessment on email and live chat should be to set criteria that require human emotion, so that responses evoke the right feelings in customers.

To make this point, let’s go back to the rail industry. Here’s an example of an email that was sent to a rail company.

Dear Sir / Madam

I am writing to complain about my recent journey from X to Y.

There was no trolley service on the train. London to Darlington is three hours and that’s a long time without a cup of tea! Especially after I’d made a journey to X in the snow.

That was bad enough but the main reason I’m writing to complain is the 50-minute delay to the service. It meant that I missed my connecting train and had to stand outside for another 45 minutes while I waited for the next one. This was very frustrating and turned what should be an easy journey into an uncomfortable one – I’m 72 and my knees do not like being in the cold!

Mrs Smith

So, Mrs Smith clearly hasn’t had the greatest customer experience. Yet, a basic robot would likely respond in the following way.

Hi Pamela,

I am sorry you felt the need to complain.

Your journey has been reviewed and a trolley service was not operated on this service due to a staff shortage.

The delay was due to a slow running rolling stock ahead of the train.

I note your dissatisfaction with the service received and apologise for any inconvenience caused. In recognition of the delay to this service, please find enclosed rail vouchers to the value of £50.00.

Yours faithfully,

Robot

Unsurprisingly, Neil is not a big fan of this response, saying that “we have £50, which we can be happy about, but it doesn’t appear until the end.”

“Also, there are lots of stock phrases in here, no real empathy and a robot really could write this response.”

“Basically, it’s a generic cut and paste job, so Mrs Smith is not going to be happy with this and our quality assessment shouldn’t be either.”

Now let’s go back to the five questions highlighted earlier, taken from a genuine email/live chat evaluation form, to see whether this “robotic” reply passes the quality test.

1. Was the customer’s name used?

Yes – Mrs Smith’s first name (Pamela) was used, although the robot should have addressed her in the way she prefers.

2. Were all issues covered?

Yes – The robot has noted both the train delay and the trolley service issue in its response. However, whether it covered them in the way Mrs Smith would have wanted is another question.

3. Was an expression of empathy used?

Yes – The robot does well to use an empathy statement at the beginning of the email, appearing to show straight away that the customer has been listened to with the phrase “I am sorry you felt…”. However, the sincerity of this statement is questionable.

4. Was an apology offered?

Yes – Ideally, an apology should sit alongside the empathy statement at the beginning of the email. In this example, the robot only “apologise[s] for any inconvenience caused” further down. Even so, on the basis of this criterion, it still counts.

5. Was ownership taken?

Yes – The key to taking ownership of a customer’s problem is to use personal pronouns, such as “I”. In this example, the robot did so on a couple of occasions.

On the basis of these criteria, the robot passed the quality test, meeting all five items on the scorecard. This demonstrates the need to ask the right questions.

Better Questions to Ask

Whatever the channel, when you are looking for these human elements and want to score them consistently and accurately, the trick is to ask better questions.

So, let’s reword the five questions highlighted above in order to get the most out of having a human advisor. Neil Martin’s suggested rewordings are shown below.

| Instead of… | Ask… |
|---|---|
| Was the customer’s name used? | Did you address the customer how they would like to be addressed? |
| Were all issues covered? | Did you answer all the points in order of importance to the customer? |
| Was an expression of empathy used? | Did you show empathy by reflecting back their experience? |
| Was an apology offered? | If we made a mistake, did you say sorry sincerely upfront? |
| Was ownership taken? | Did you take ownership for what you did? |

So, using this new set of questions, let’s go back to the robot’s response to Mrs Smith, in order to see if it would still score five out of five on the new quality scorecard.

1. Did you address the customer how they would like to be addressed?

No – The customer signed off as Mrs Smith, which is her signal as to how she would like to be addressed. Yet the robot used her first name.

2. Did you answer all the points in order of importance to the customer?

No – The more important of the two issues that were raised by Mrs Smith was the delay. While it is nice to have a “cup of tea” on the train, it was not the most significant problem. However, the robot highlighted the trolley service incident first.

3. Did you show empathy by reflecting back their experience?

No – Technically, the robot did use a generic expression of empathy. However, it did not reflect back Mrs Smith’s experience of missing her connecting train, or the fact that she is 72 and her “knees do not like being in the cold”.

4. If we made a mistake, did you say sorry sincerely upfront?

No – While an apology is offered, it is right at the bottom of the robot’s email and the phrase “any inconvenience” really undermines it. Without referring back to the customer’s problem, an apology lacks authenticity.

5. Did you take ownership for what you did?

No – While the word “I” is used in the robot’s response, there isn’t any ownership. For example, in the robot’s response it says, “Your journey has been reviewed and a trolley service was not operated…”, but who reviewed it? Who didn’t operate it?

So, with this set of questions, did the robot pass? No! In fact, it scored zero out of five.

Even though the same areas were assessed (e.g. addressing the customer, covering the issues and showing empathy), asking better questions means the email has now been given the score it really deserves.

Applying the Scorecard Effectively

Once the contact centre is confident that it is asking the right questions, Neil Martin makes an important final point about applying them effectively.


Neil says: “Quality assessment is not all about writing something down and producing a scorecard, it includes training your people to be able to apply it and ask the right questions.”

“If you train call analysts in this way, they know what they’re looking for and they can explain it to their team.”

“So, it might be your team managers who do quality assessment, it might be ‘champion advisors’, but whoever does it, make sure that they really understand what they’re looking for.”

To ensure everyone has the same understanding of how to score advisors, calibration is important. For advice on how to do this, read our article: How to Calibrate Quality Scores

Download our free example call monitoring form: Free Call Monitoring Form

Thanks to Neil Martin for his help in putting this article together. For more from Neil, read our article: 10 Best Practices to Improve Live Chat

Author: Neil Martin

Reviewed by: Megan Jones

Published On: 16th May 2018 - Last modified: 14th Aug 2025
